Optimization for Economics, a Visual Approach

Mike Carr

Contents

0 Calculus Reference 3

0.1 Graphs of Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

0.2 Limits and Derivatives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

0.3 Multivariable Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1 Unconstrained Optimization 31

1.1 Single-Variable Optimization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

1.2 Concavity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

1.3 Multivariable Optimization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

2 Constrained Optimization 89

2.1 Equality Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

2.2 Inequality Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

2.3 The Kuhn-Tucker Conditions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

3 Comparative Statics 135

3.1 The Implicit Function Theorem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

3.2 The Envelope Theorem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

4 Sufficient Conditions 163

4.1 The Extreme Value Theorem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

4.2 The Bordered Hessian . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

4.3 Separation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

4.4 Concave Programming . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

4.5 Quasiconcavity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

1

Note to the Reader

For the last several years I have taught a course in optimization at Emory University for seniors

majoring in mathematics and economics. While mathematics and economics is the standard preparation

for a doctoral program in economics, the vast majority of students in this course are bound for careers

in finance. One of the challenges in designing this course has been balancing the interests and needs

of students on both tracks. These notes have evolved from my own teaching materials. Their goal is

to present methods for optimization and the reasoning behind them. This reasoning makes students

resilient to the myriad modifications that these tools endure at the hands of economists.

As an outsider to economics education, I was impressed to see the extent to which economics has

embraced visual methods of instruction. More than any other field whose foundation lies in mathematics,

the standard economics curriculum provides students not only with descriptions and formulas, but with

diagrams to depict core principles. Microeconomics students are inundated with supply curves, budget

lines, indifference curves, and graphs in marginal space. This is certainly a lifeline for visual learners,

but I suspect it produces deeper understanding for all.

As a geometer, my first inclination is to lean heavily upon visual reasoning when presenting the

methods of optimization. In our efforts to find a textbook for our admittedly niche curriculum, we have

not found a economics-focused text that takes this approach. I have made these notes available in the

hope that this visual viewpoint can become an effective supplement to existing techniques. Like with

the graphical arguments in undergraduate microeconomics, I think it can bring a complete and rigorous

understanding of optimization methods to a broader set of learners.

The audience for this text is anyone who wants to understand the mathematical methods for finding

maximizers in economic theory. The best-prepared reader will have mastered the techniques of differ-

entiation, including partial derivatives. They will also be familiar with the foundations of mathematical

logic: set notation, functions, and methods of proof like contradiction and the contrapostive. Finally,

they will need a basic proficiency in vector and matrix operations: sums, products and determinants. A

competent high-school treatment may suffice for this requirement.

Anyone who sets out to teach or learn the methods of optimization from this text should be aware

of its limitations. It does not contain the economic applications that my economist colleagues present

each semester. These must be provided in order to create genuine enthusiasm for the material. This is

a deficiency that I would like to rectify eventually, if there is some consensus about which examples to

include.

We have used one widely-available source of economic examples: Optimization for Economic Theory

by Avinash Dixit. This excellent book has a rigorous, traditional treatment of most of the material here

and good explanations of some advanced applications. Dixit’s book has proved too difficult for all but the

strongest undergraduates to read independently, but the examples are compelling and comprehensible

with some extra exposition.

Finally I would like to acknowledge my economist colleagues Blake Allison and Teddy Kim who were

essential partners in developing this course. Three students, Alexia Witthaus, Jacob Sugarmann and

Aarya Aamer, carefully read my early drafts and asked many questions. Their feedback allowed me to

identify the most opaque exposition (and clarify it, I hope). There is still a long road of revision and

improvement ahead for this document. I would appreciate any feedback you have.

Mike Carr

May 2023

Chapter 0

Calculus Reference

0.1

Graphs of Functions

Goals:

1 Graph algebraic functions.

2 Graph transformations of functions.

0.1.1

Graphs of Basic Functions

Definition 0.1

The graph of a function f(x) is the set of points (x, y) whose coordinates satisfy the equation y = f(x).

In this section we review several basic functions and their graphs. These will be important as examples

and counterexamples for our methods of optimization. In addition, knowing the shape of a graph is an

efficient way to memorize the behavior of functions that appear frequently in economic models.

Definition 0.2

Linear functions can be written in slope-intercept form:

f(x) = mx + b.

The graph of a linear function is a line.

m is the slope, which is the change in y over the change in x between any two points on the line.

(0, b) is the y intercept.

If we have the slope and a known point (a, b) on a line. We can write its equation in point-slope

form.

y − b = m(x − a)

4

Definition 0.3

A monomial is a function of the form:

f(x) = x

n

where n is an integer greater than 0.

For n ≥ 2 the graph y = x

n

curves upward over the positive values of x.

Greater values of n have lower values of f(x) when 0 < x < 1 but higher values when x > 1.

For even values of n the graph is symmetric across the y-axis, curving up when x is negative.

For odd values of n the graph curves down when x is negative. It is anti-symmetric across the

x = 0.

x

2

x

4

x

6

x

y

Figure 1: Graphs of even-powered

monomials

x

x

3

x

5

x

y

Figure 2: Graphs of odd-powered

monomials

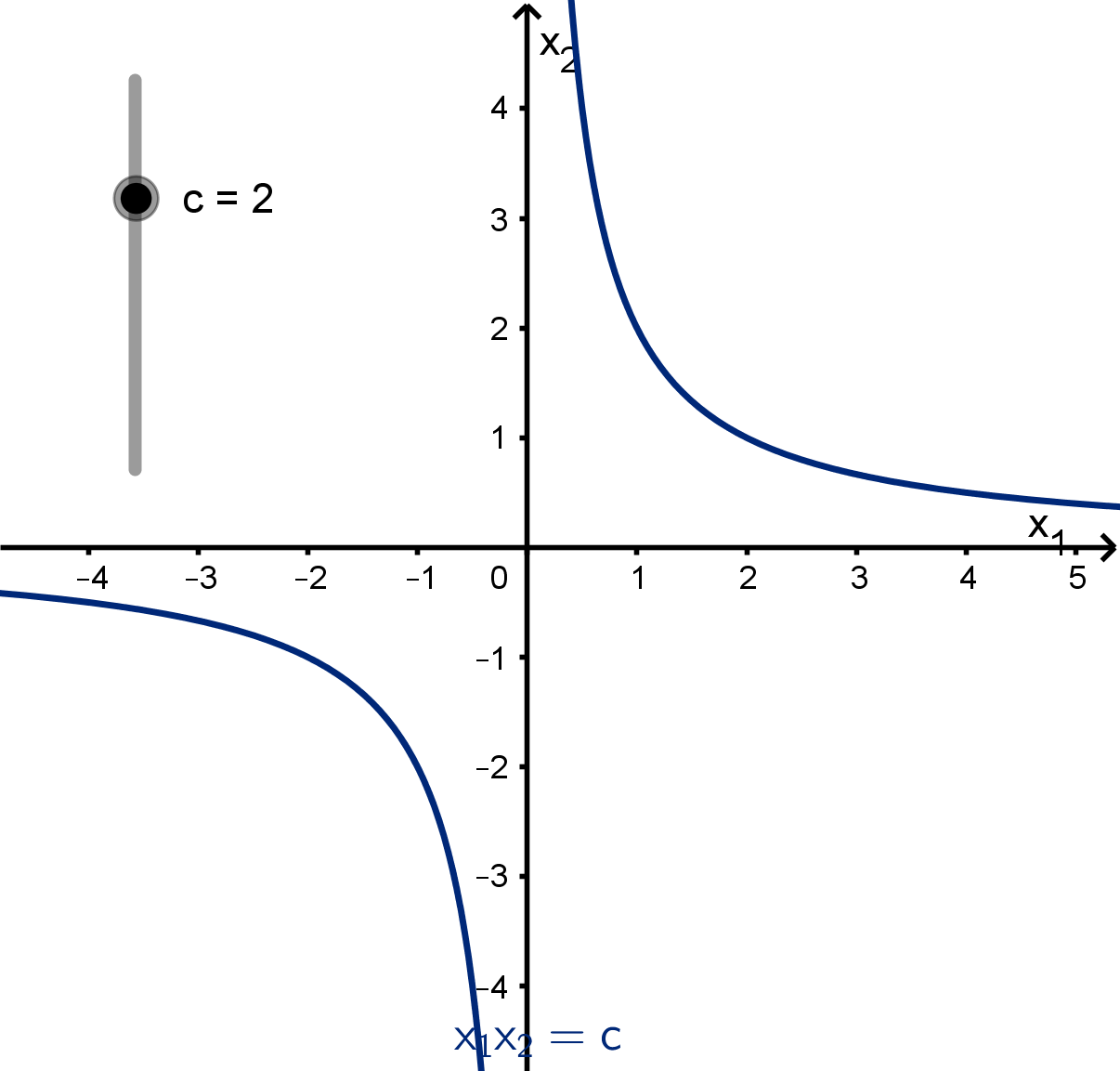

Monomials of Negative Power

Monomials of negative power have the form f (x) = x

−n

. They are also commonly written

f(x) =

1

x

n

.

The graph y =

1

x

n

has a vertical asymptote at x = 0.

The graph approaches the x-axis, y = 0 as x gets large.

For even values of n, the graph is above the x-axis.

For odd values of n, the graph is above the x-axis for positive x and below it for negative x.

A greater choice of n makes the function approach the x-axis more quickly.

5

0.1.1

Graphs of Basic Functions

x

y

Figure 3: Graphs of y = x

−2

, y = x

−4

and y = x

−6

x

y

Figure 4: Graphs of y = x

−1

, y = x

−3

and y = x

−5

Definition 0.4

A root function is a function of the form:

f(x) =

n

√

x

where n is an integer greater than 0.

The domain of

n

√

x is [0, ∞) if n is even and all real numbers if n is odd.

The x and y intercept of y =

n

√

x is at (0, 0).

Root functions are increasing. At x = 0, they travel straight up.

√

x

3

√

x

x

y

Figure 5: Graphs of root functions

6

Definition 0.5

An exponential function has the form:

f(x) = a

x

where a is a number greater than 0.

a is called the base of the exponential function.

The graph y = a

x

passes through (0, 1).

If a > 1 then f(x) increases quickly as x takes on positive values. Greater values of a give a

steeper increase. f(x) approaches 0 as x goes to −∞. Greater values of a give a faster approach.

The graph does not touch or cross the x-axis.

If a < 1, then the above is reversed.

e is a commonly used base. e is approximately 2.718.

x

y

Figure 6: Graphs of y = 2

x

, y = e

x

and y = 3

x

Definition 0.6

A logarithmic function has the form:

f(x) = log

a

x

where the base a is a number greater than 1. log

a

x is the number b such that a

b

= x. The natural

logarithm is the logarithm with base e. It is denoted f (x) = ln x.

a

b

can never be 0 or less. The domain of f(x) = log

a

x is (0, ∞).

As x approaches 0, log

a

x goes to −∞.

y = log

a

x has an x intercept at (1, 0).

7

0.1.1

Graphs of Basic Functions

y = log

a

x grows more and more slowly as x increases. This effect is more pronounced for larger

values of a.

x

y

Figure 7: Graphs of y = log

2

x, y = ln x and y = log

10

x

Logarithms and exponents are inverse functions. We solve exponential equations by applying a

logarithm to both sides. We solve logarithm equations by exponentiating both sides.

a

x

= c x = log

a

c

log

a

x = c x = a

c

0.1.2

Graphs of Transformations

Suppose we would like to transform the graph y = f(x). Here are four ways we can.

The graph of y = af(x) is stretched by a factor of a in the y direction.

The graph of y = f(x) + b is shifted by b in the positive y direction.

The graph of y = f(cx) is compressed by a factor of c in the x direction.

The graph of y = f(x + d) is shifted by d in the negative x direction.

We can perform multiple transformations on a single function.

8

Figure 8: The graph of y = f (x) and its transformation y = af (cx + d) + b

9

0.2

Limits and Derivatives

Goals:

1 Verify that a function is continuous.

2 Compute derivatives.

3 Use derivatives to understand graphs and vice versa.

0.2.1

The Limit of A Function

Calculus is the study of change. Our most important rate of change cannot be computed directly,

but exists only as a limit of rates.

Definition 0.7

The limit as x approaches a (or x → a) of a function f(x) is denoted lim

x→a

f(x).

lim

x→a

f(x) = L means that we can make f(x) arbitrarily close to L by restricting x to a small

enough neighborhood surrounding a.

If there is no L such that lim

x→a

f(x) = L, we say that lim

x→a

f(x) does not exist.

Remark

arbitrarily close means any amount of closeness demanded. We need to be able to make f(x)

within

1

10

of L, within

1

1000

of L, within

1

10000000

of L and so on. When proving that a limit

exists, mathematicians traditionally model this closeness with the variable ϵ. We indicate or verify

arbitrary closeness with the inequality |f (x) − L| < ϵ.

By a neighborhood we mean an open interval that contains a. The set {a} is not a neighborhood.

If it were, then every function would limit to f(a) as x → a. Mathematicians generally restrict to

neighborhoods of the form (a − δ, a + δ), then they need a way to produce a valid, positive δ for

any given positive ϵ.

10

Figure 9: A neighborhood of a that keeps f(x) within ϵ of L

0.2.2

Continuity

Limits give us a formal approach to defining continuity. Many of our results will rely on the fact that

a function is continuous.

Definition 0.8

A function f(x) is continuous at a, if

lim

x→a

f(x) = f(a).

f(x) can also be continuous on an interval or other set of points if it is continuous at each a in that

set. If it is continuous on R, we say f(x) is a continuous function.

Proving that a function is continuous requires us to verify its limit at every point a. This is too

much work for a case-by-case basis. Instead mathematics adopts a constructive approach. First we show

that a few basic functions are continuous. Next we prove that sums, differences, products and other

combinations preserve continuity.

11

0.2.2

Continuity

Theorem 0.9

The following functions are continuous on their domains

1 Constant functions

2 Linear functions

3 Polynomials

4 Roots

5 Exponential functions

6 Logarithms

7 Trigonometric functions

8 f(x) = |x|

Theorem 0.10

If f(x) and g(x) are continuous on their domains, then the following are also continuous on their

domains

1 f(x) + g(x)

2 f(x) − g(x)

3 f(x)g(x)

4

f(x)

g(x)

(note that any x where g(x) = 0 is not in the domain)

5 f(x)

g(x)

as long as f(x) > 0

6 f(g(x))

We can use these theorems together to argue that complicated functions are continuous.

12

Example

The function f(x) =

4

√

3x

2

− 17x + 2 −

e

x

log

5

x

is the difference of two functions. The first is a compo-

sition of a root function and polynomial (both continuous on their domains). The second is a quotient

of an exponential and a logarithm (both continuous on their domains). Thus f(x) is the difference of

two continuous functions and is continuous on its domain.

Remark

Just about any function we can write using algebraic expressions is continuous on its domain. This does

not mean it is continuous everywhere. f(x) =

1

x

is not continuous at x = 0, for example.

0.2.3

The Intermediate Value Theorem

One early intuition for continuity is that the graph of a continuous function can be drawn without

any breaks. There are many ways to formalize this idea. One of the most important is the following

theorem.

Theorem 0.11 [The Intermediate Value Theorem]

If f is a continuous function on [a, b] and K is a number between f(a) and f (b), then there is some

number c between a and b such that f(c) = K.

Intuitively, a continuous graph cannot get from one side of the line y = K to the other without

intersecting y = K. Notice that this theorem does not say exactly where this intersection must occur,

only that it must occur somewhere in the interval (a, b). It also does not rule out the possibility of more

than one such c existing.

Example

Show that f (x) = e

x

− 3x has a root between 0 and 1.

13

0.2.3 The Intermediate Value Theorem

Solution

A root is a number c such that f(c) = 0. To prove such a root exists, we check the conditions of the

intermediate value theorem.

f(x) is a sum of continuous functions, so it is continuous on its domain.

f(0) = 1

f(1) = e − 3 < 0

0 is between f(0) and f(1)

We conclude there is some c between 0 and 1 such that f (c) = 0.

−1

1 2

−1

1

2

y = e

x

− 3x

(0, 1)

(1, 3 − e)

c

x

y

Figure 10: A root of y = e

x

− 3x

0.2.4

The Derivative

The derivative is a method for measuring the rate of change of a function.

14

Definition 0.12

Given a function f(x), the derivative of f(x) at a is the number

lim

h→0

f(a + h) − f (a)

h

.

The derivative function of f(x) is the function

lim

h→0

f(x + h) − f (x)

h

.

Here are two different notations for the derivative at a.

1

df

dx

(a) (Leibniz)

2 f

′

(a) (Lagrange)

The ratio

f(a + h) − f (a)

h

can be interpreted two ways

1 The average rate of change of f between a and a + h

2 The slope of a secant line of y = f(x) from (a, f(a)) to (a + h, f(a + h))

Since the derivative is the limit of these, we interpret f

′

(a) as

1 The instantaneous rate of change of f at a

2 The slope of a tangent line to y = f(x) at (a, f(a))

Figure 11: A secant line and a tangent line to y = f (x)

15

0.2.4

The Derivative

We can take higher order derivatives by taking derivatives of derivatives. The derivative function

of f in this context is called the first derivative. Its derivative function is the second derivative. The

second derivative’s derivative function is the third derivative and so on.

Notation

The following notations are used for higher order derivatives

name Lagrange notation Leibniz notation

first derivative f

′

(x)

df

dx

second derivative f

′′

(x)

d

2

f

dx

2

third derivative f

′′′

(x)

d

3

f

dx

3

fourth derivative f

(4)

(x)

d

4

f

dx

4

fifth derivative f

(5)

(x)

d

5

f

dx

5

The sign of a higher order derivative tells us how the derivative of one order lower is changing. For

example if

d

5

f

dx

5

< 0, then

d

4

f

dx

4

is decreasing.

0.2.5

Computing Derivatives

The limit definition of a derivative is too unwieldy to use every time. Instead calculus students learn

the derivatives of some basic functions. They then use theorems to compute derivatives when those

functions are combined.

16

Derivatives of Basic Functions

d

dx

c = 0 (derivative of a constant is 0)

d

dx

x

n

= nx

n−1

for any n = 0 (The Power Rule)

d

dx

e

x

= e

x

d

dx

a

x

= a

x

ln a for a > 0

d

dx

ln x =

1

x

d

dx

log

a

x =

1

x ln a

The following rules allow us to differentiate functions made of simpler functions whose derivative we

already know.

Differentiation Rules

Sum Rule: (f(x) + g(x))

′

= f

′

(x) + g

′

(x)

Constant Multiple Rule: (cf(x))

′

= cf

′

(x)

Product Rule: (f(x)g(x))

′

= f

′

(x)g(x) + g

′

(x)f(x)

Quotient Rule:

f(x)

g(x)

′

=

f

′

(x)g(x)−g

′

(x)f(x)

(g(x))

2

unless g(x) = 0

Chain Rule: (f(g(x))

′

= f

′

(g(x))g

′

(x)

0.2.6

Computing a Derivative

Compute

d

2

dx

2

(4

√

75 − 3x

2

+ 10)

17

0.2.6

Computing a Derivative

Solution

We begin by computing the first derivative. We use the chain rule with 4x

1/2

+ 10 as the outer function

and 75 − 3x

2

as the inner function.

d

dx

(4

p

75 − 3x

2

+ 10) = 2(75 − 3x

2

)

−1/2

(−6x)

= −

12x

√

75 − 3x

2

We compute the second derivative by differentiating the first derivative. We use the quotient rule. We

need the chain rule again when we differentiate the denominator.

d

2

dx

2

(4

p

75 − 3x

2

+ 10) = −

d

dx

12x

√

75 − 3x

2

= −

d

dx

(12x)

√

75 − 3x

2

−

d

dx

(

√

75 − 3x

2

)(12x)

(

√

75 − 3x

2

)

2

= −

12

√

75 − 3x

2

+

3x

√

75−3x

2

(12x)

75 − 3x

2

= −

12

√

75 − 3x

2

+

36x

2

√

75−3x

2

75 − 3x

2

We can obtain a common denominator to simplify this expression.

d

2

dx

2

(4

p

75 − 3x

2

+ 10) = −

12(

√

75−3x

2

)

2

√

75−3x

2

+

36x

2

√

75−3x

2

75 − 3x

2

= −

12(75 − 3x

2

) + 36x

2

(75 − 3x

2

)

3/2

= −

900

(75 − 3x

2

)

3/2

18

0.3

Multivariable Functions

Goals:

1 Compute partial derviatives.

2 Recognize continuous multivariable functions.

3 Apply the chain rule to compositions of multivariable functions.

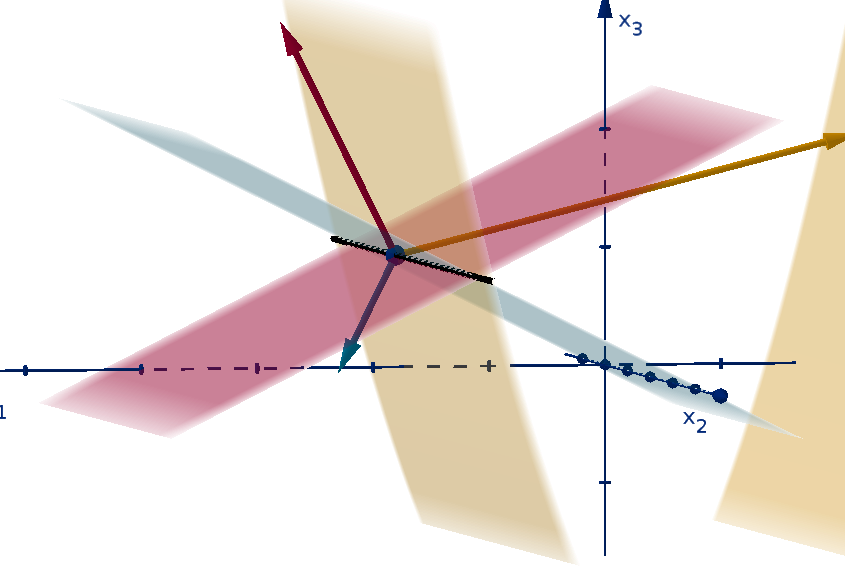

0.3.1

Multi-Variable Functions

Most interesting phenomena are not described by a single variable. We will need to develop methods

for optimizing multivariable functions. There are many ways to denote multivariable domains and the

functions on them. This is how we will denote them.

Notation

An n-vector is an ordered set of n numbers called components. For instance a = (5, −

√

17, 12.31)

is a 3-vector.

We add a vector arrow, as in a, to indicate that a is a vector.

The components of a vector can be denoted abstractly by subscripts: x = (x

1

, x

2

, . . . x

n

). The

x

i

do not have arrows because they are numbers, not vectors.

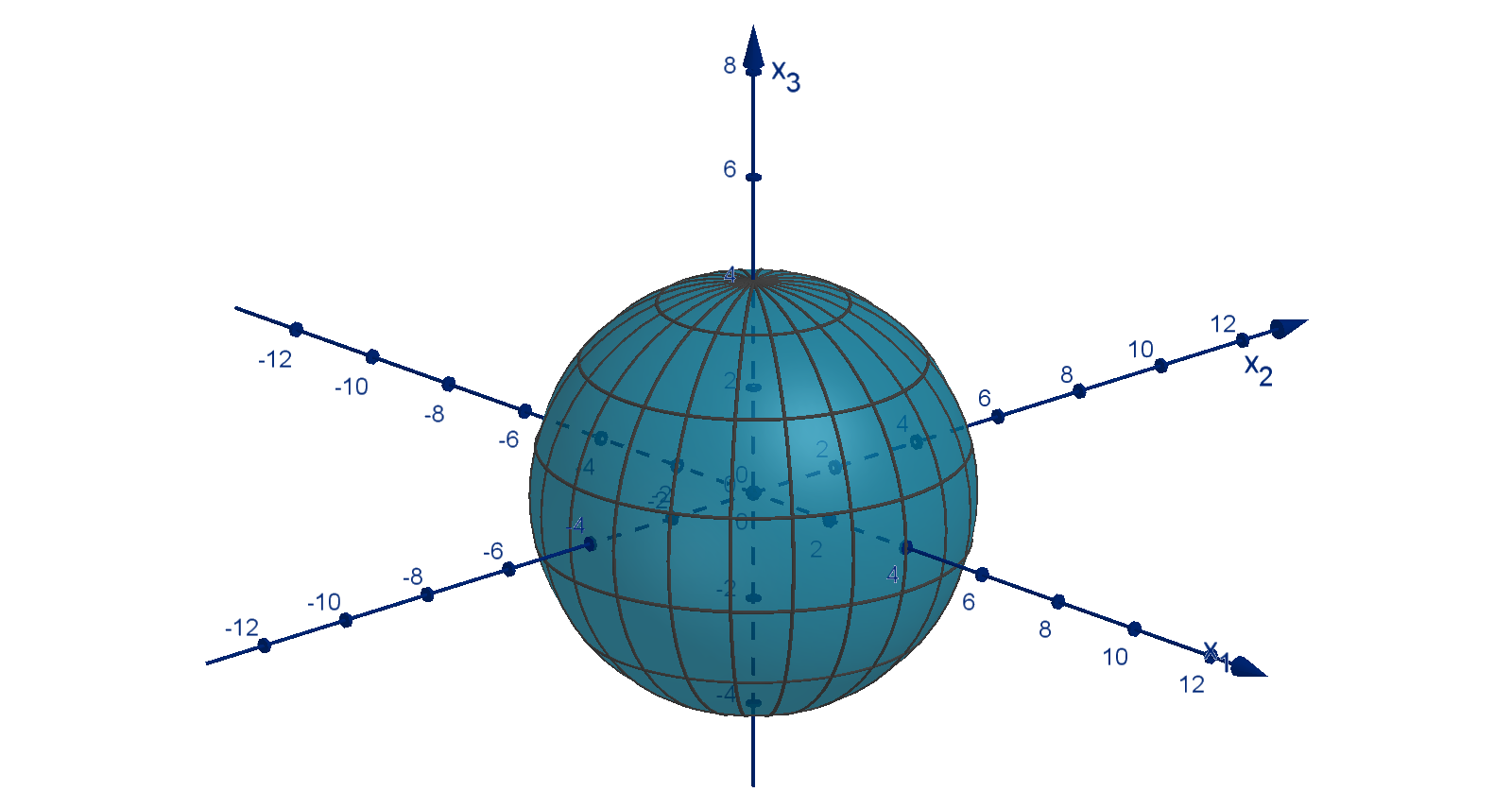

n-space, the set of all n-dimensional vectors, is denoted R

n

.

0 is the zero vector: (0, 0, 0, . . . , 0). The dimension should be clear from context.

The vectors e

1

, e

2

, . . . , e

n

are the standard basis vectors of R

n

. e

i

has 1 in the ith component

and 0 in the others. For example e

2

= (0, 1, 0, . . . , 0).

An n-variable function f (x

1

, x

2

, . . . , x

n

) can be written f(x).

19

0.3.1

Multi-Variable Functions

Remark

Using a common letter with an index variable

x

1

, x

2

, x

3

. . .

is a good choice for a large or an unknown number of variables. We will use this notation when developing

the theory of multivariable optimization. In many economics problems, there is a fixed, small number

of variables. In these problems, it is more convenient to use different letters for each variable, to avoid

keeping track of subscripts.

x, y, z, . . .

Even better, we should try to choose descriptive variable names like q for quantity or p for price.

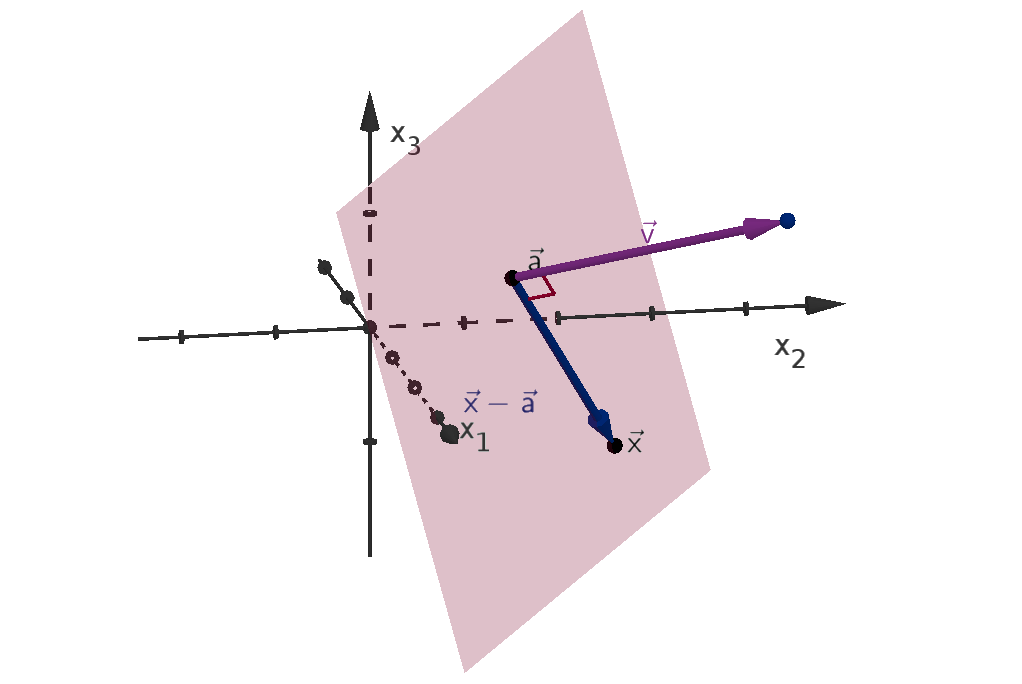

We visualize functions with their graphs. The height of the graph over a point in the domain

represents the value of the function at that point. This allows us to detect visually where the function

is large or small, increasing or decreasing.

Definition 0.13

Given an n-variable function f(x), the graph of f is the set of points (x

1

, x

2

, . . . , x

n

, y) in R

n+1

that

satisfy y = f(x

1

, x

2

, . . . , x

n

).

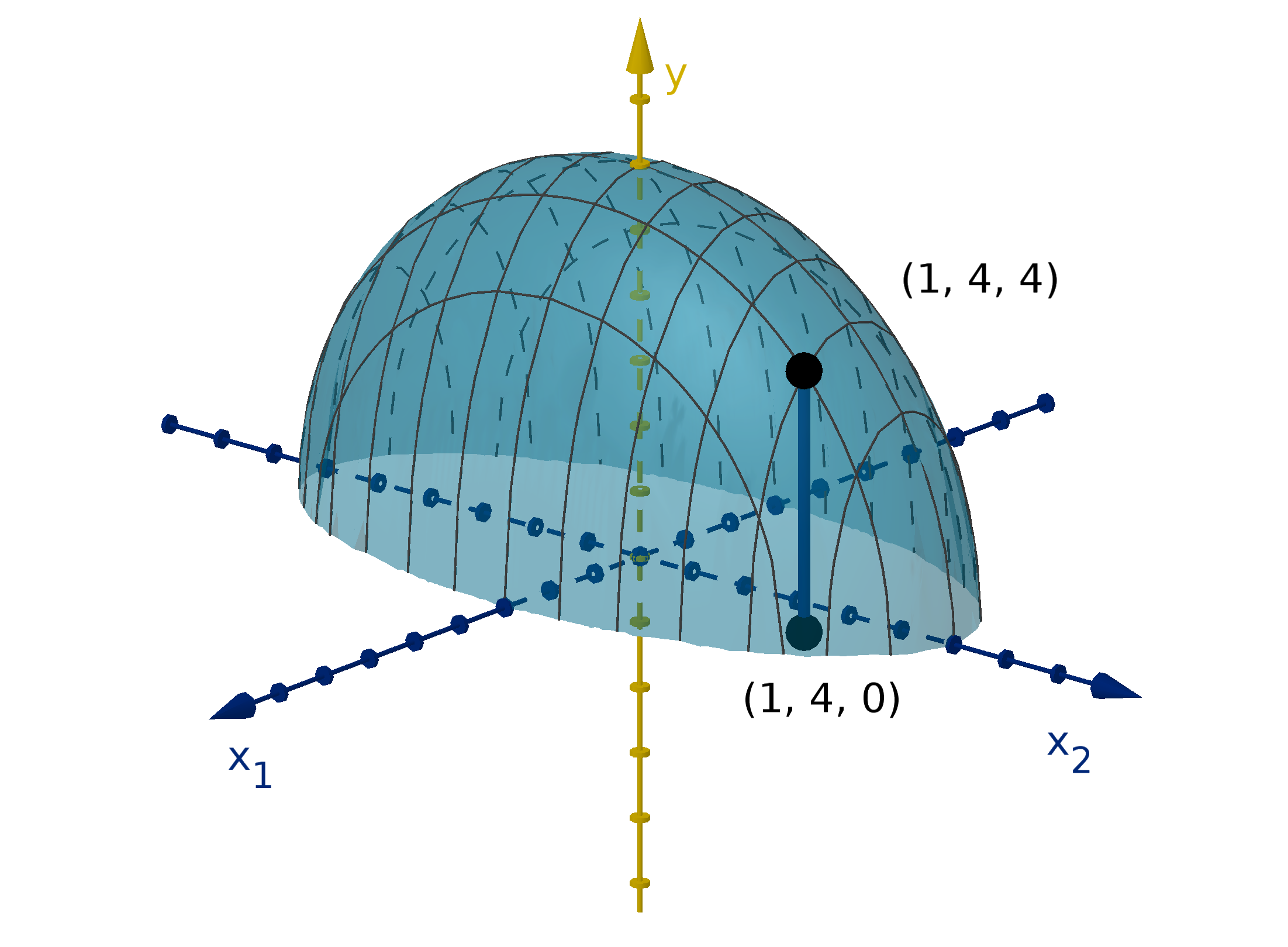

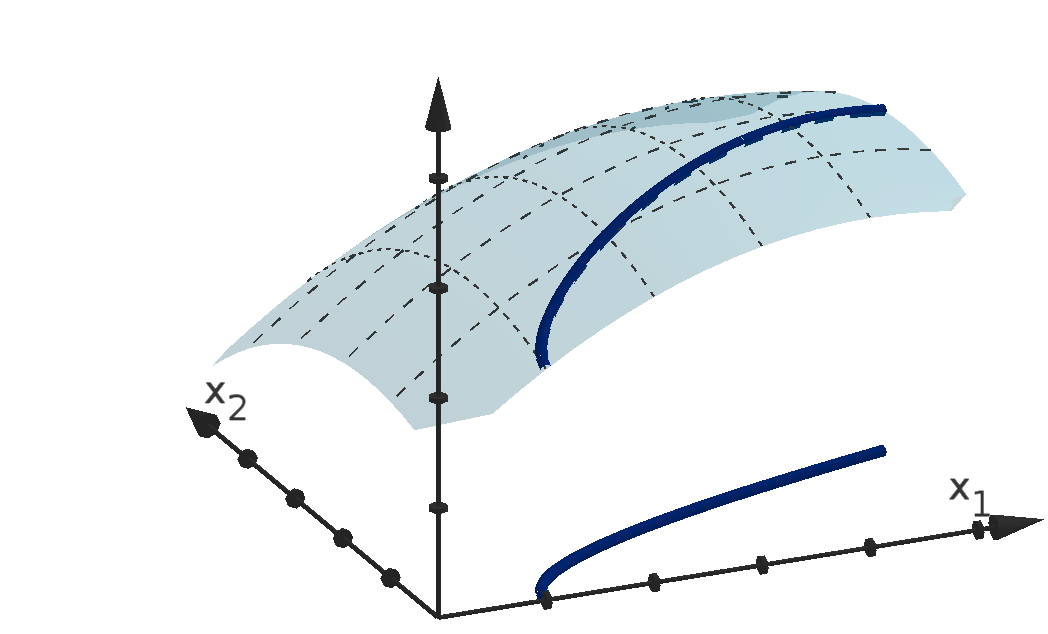

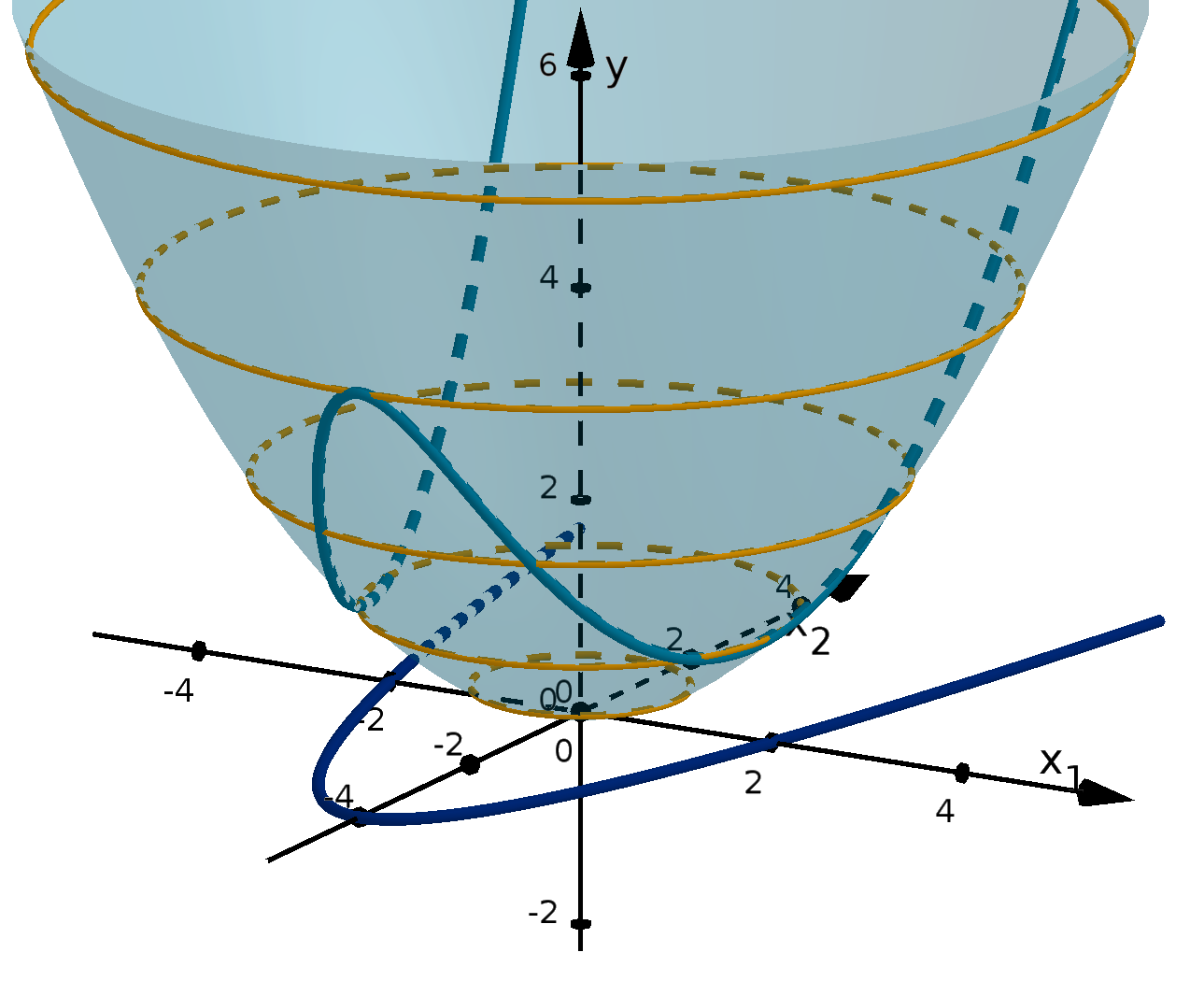

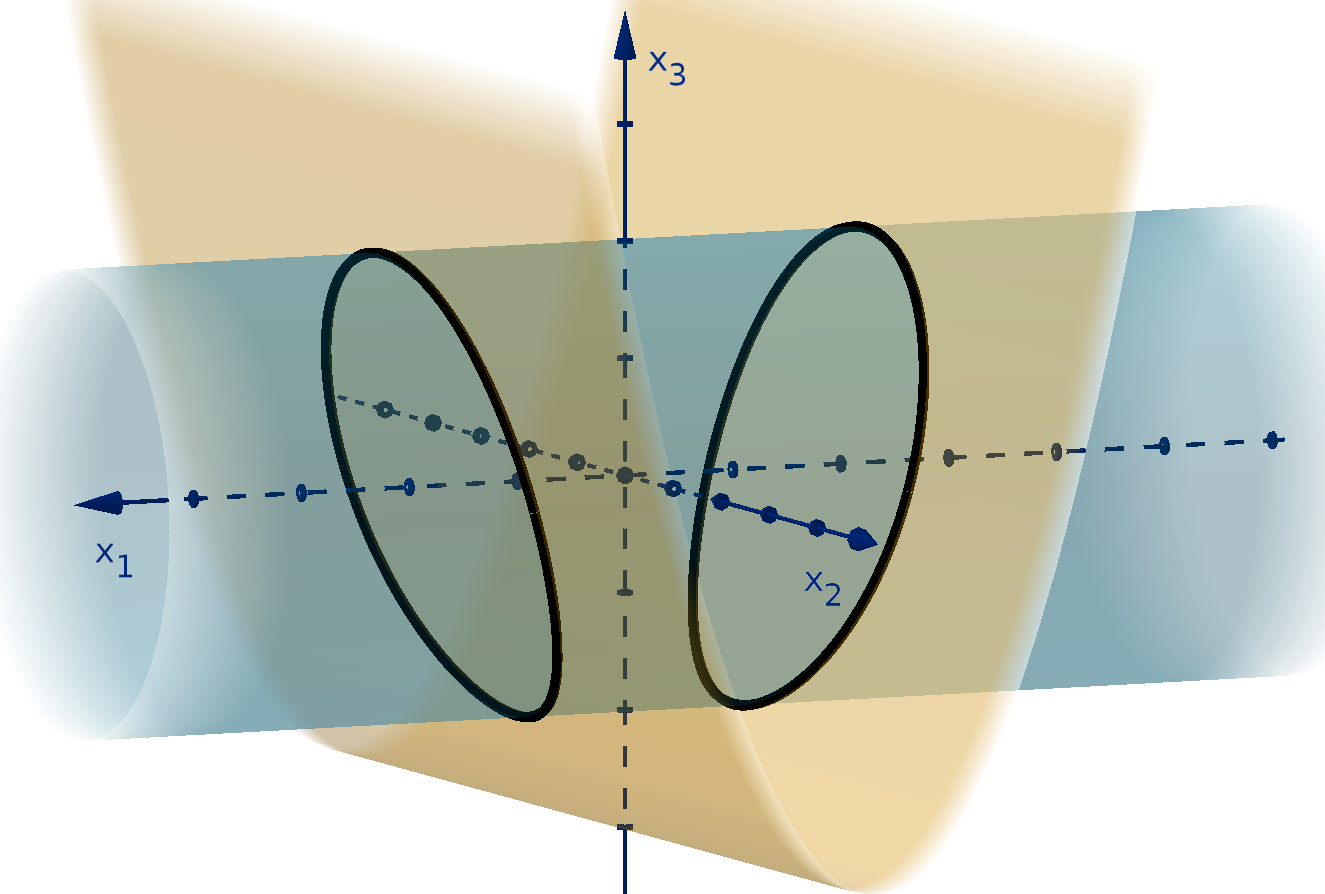

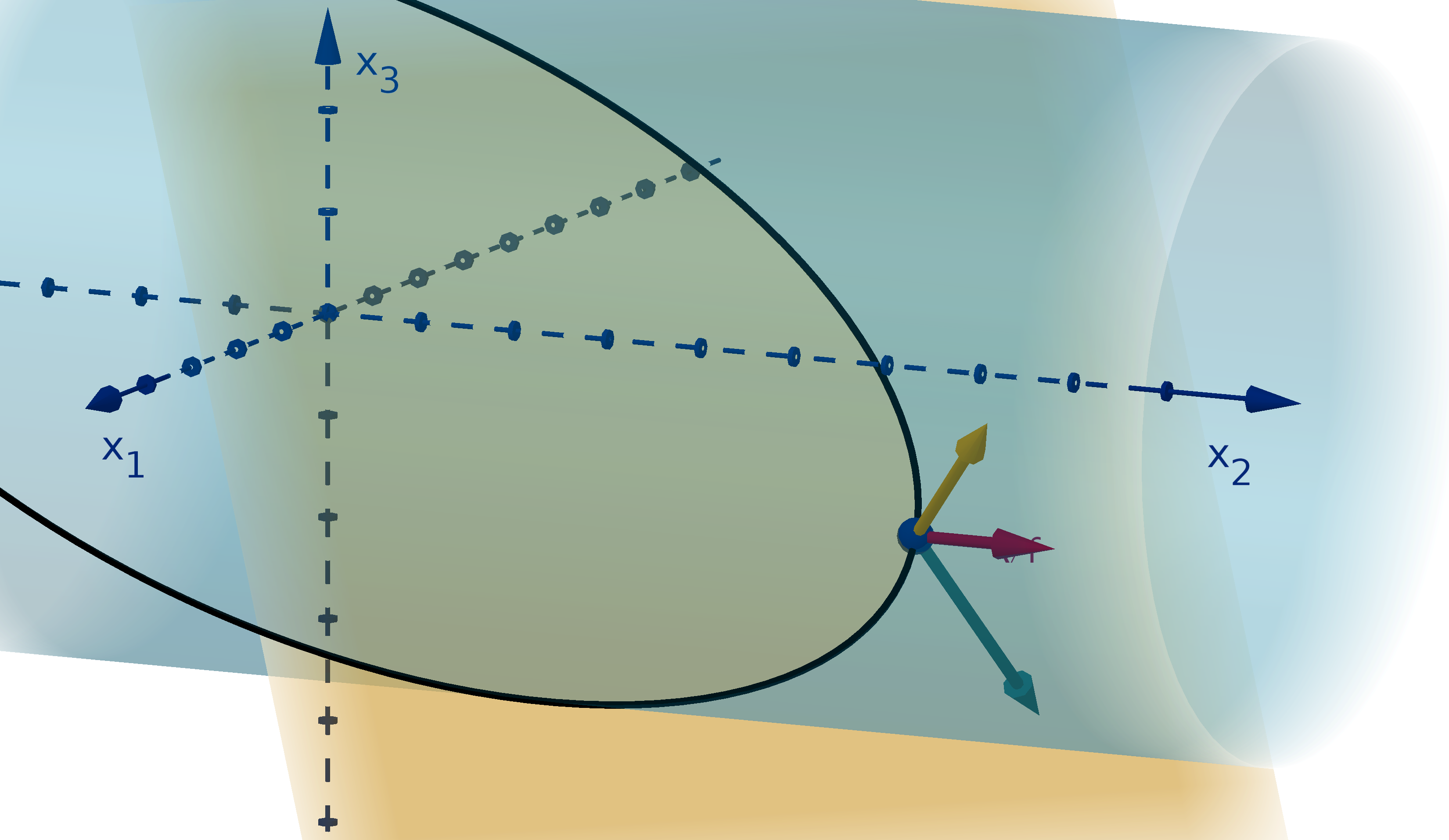

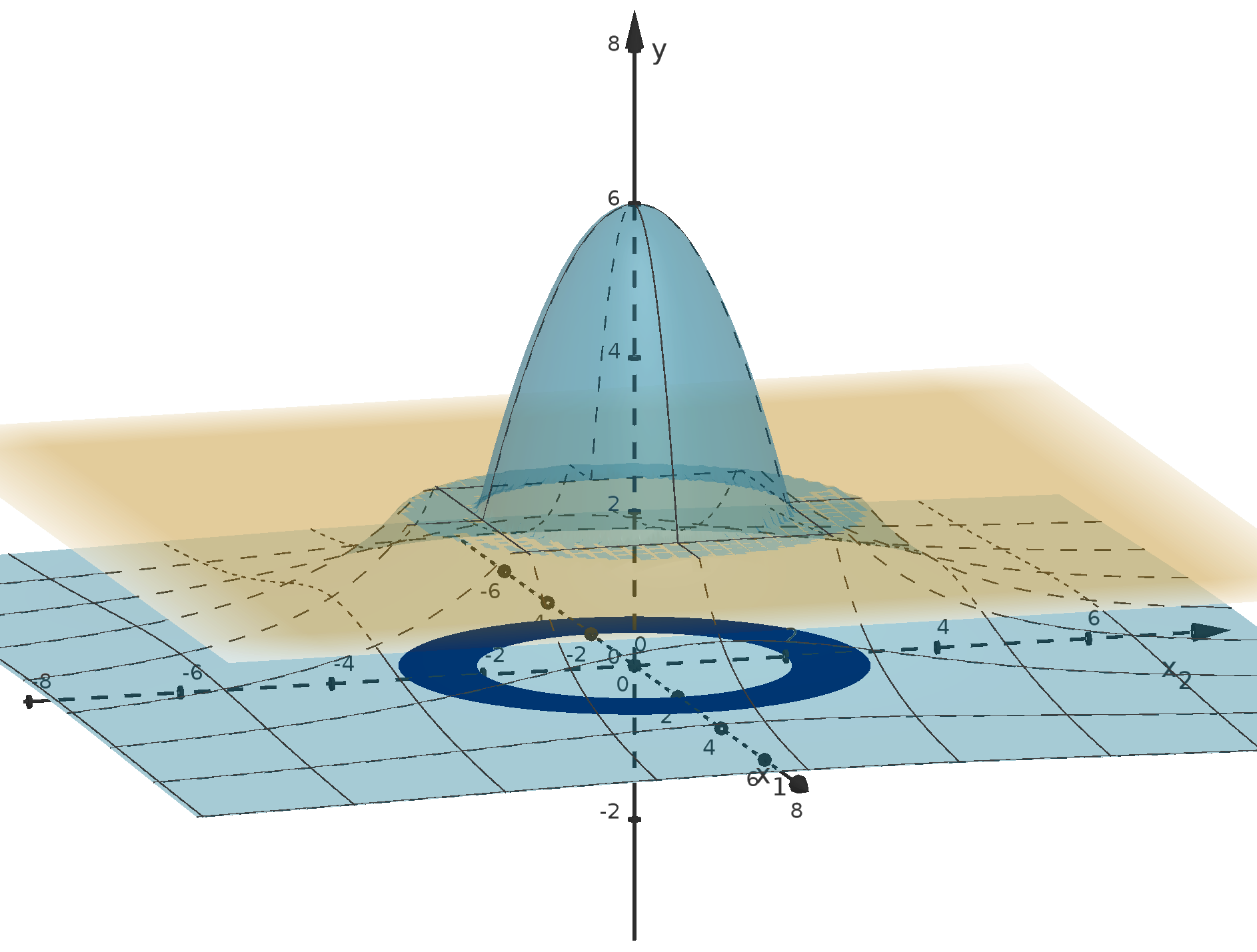

Figure 12: The graph y =

p

36 − 4x

2

1

− x

2

2

and the height showing f(1, 4).

20

Remark

In general, a graph of the form y = f (x

1

, x

2

) will be hard to visualize. For more than three variables,

this visualization becomes impossible. So why bother? Graphs are a useful visual aid to the reasoning

behind our methods. As we progress, it is useful to have an prototypical two-variable graph in your

head. You can apply our methods to that graph, whether or not you have an algebraic expression to go

with it.

0.3.2

Partial Derivatives

Our optimization tools rely on the ability to measure rates of change. For a function of multiple

variables, there are many rates of change, because there are many ways in which the input variables can

change. The simplest are those where only one variable is changing while the others remain fixed.

Definition 0.14

The partial derivative of f with respect to x

i

is a function of x. It measures the rate of change of f

at x as x

i

increases but the other coordinates remain constant. The formula is

lim

h→0

f(x + he

i

) − f (x)

h

Here are two different notations for the partial derivative.

1

∂f

∂x

i

(x) (Leibniz)

2 f

x

1

(x) (Lagrange)

Each has advantages, so we will use both. When it will not cause confusion, we can shorten Lagrange’s

notation from f

x

i

(x) to f

i

(x).

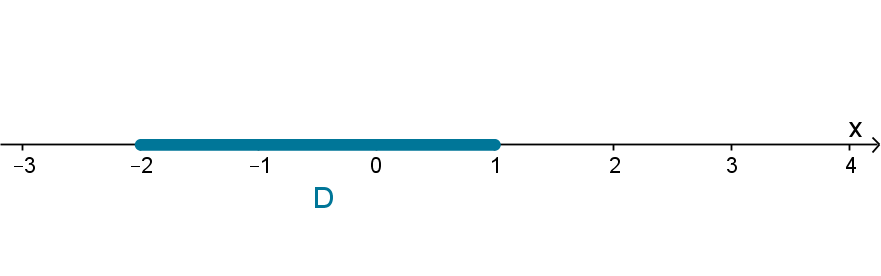

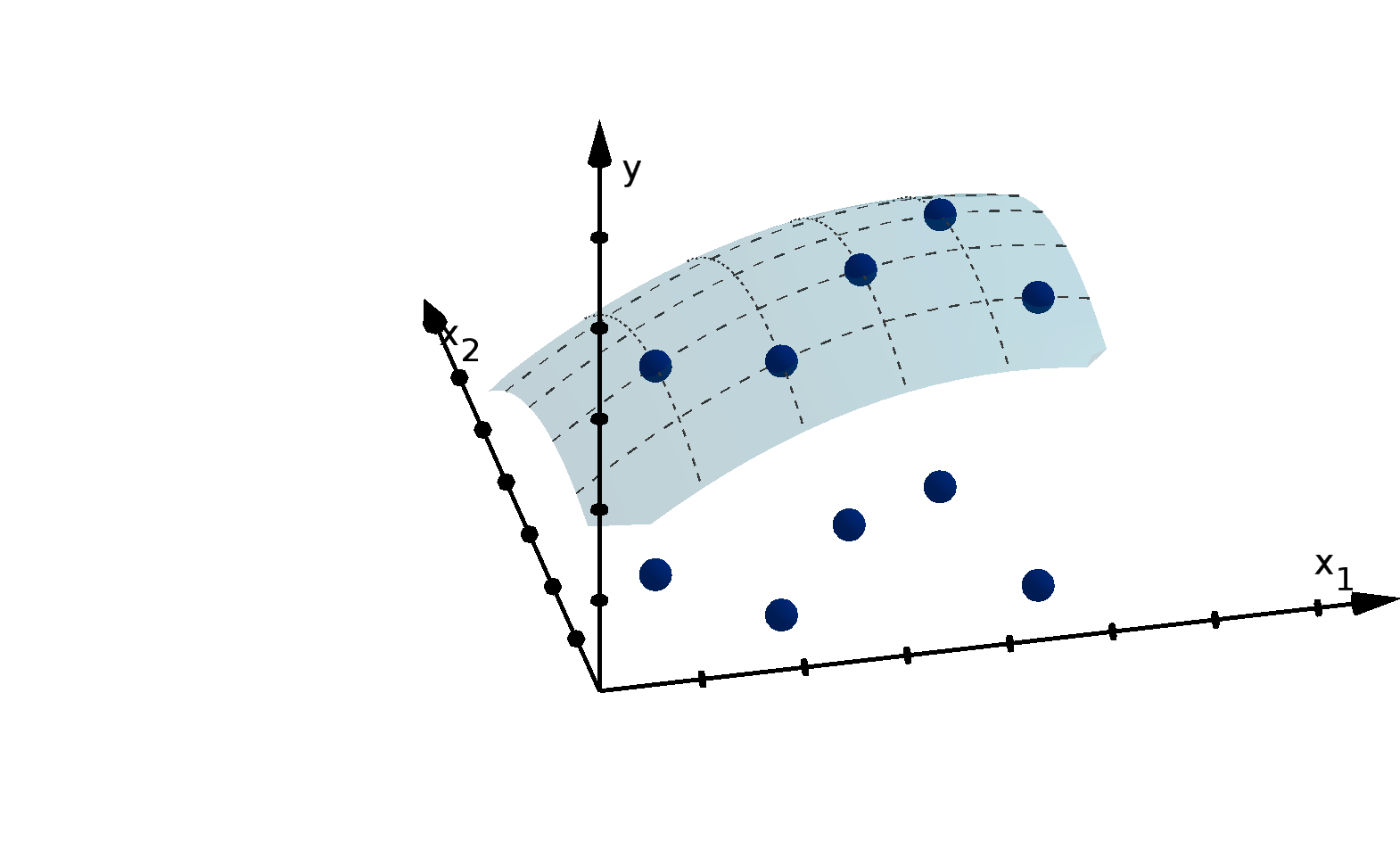

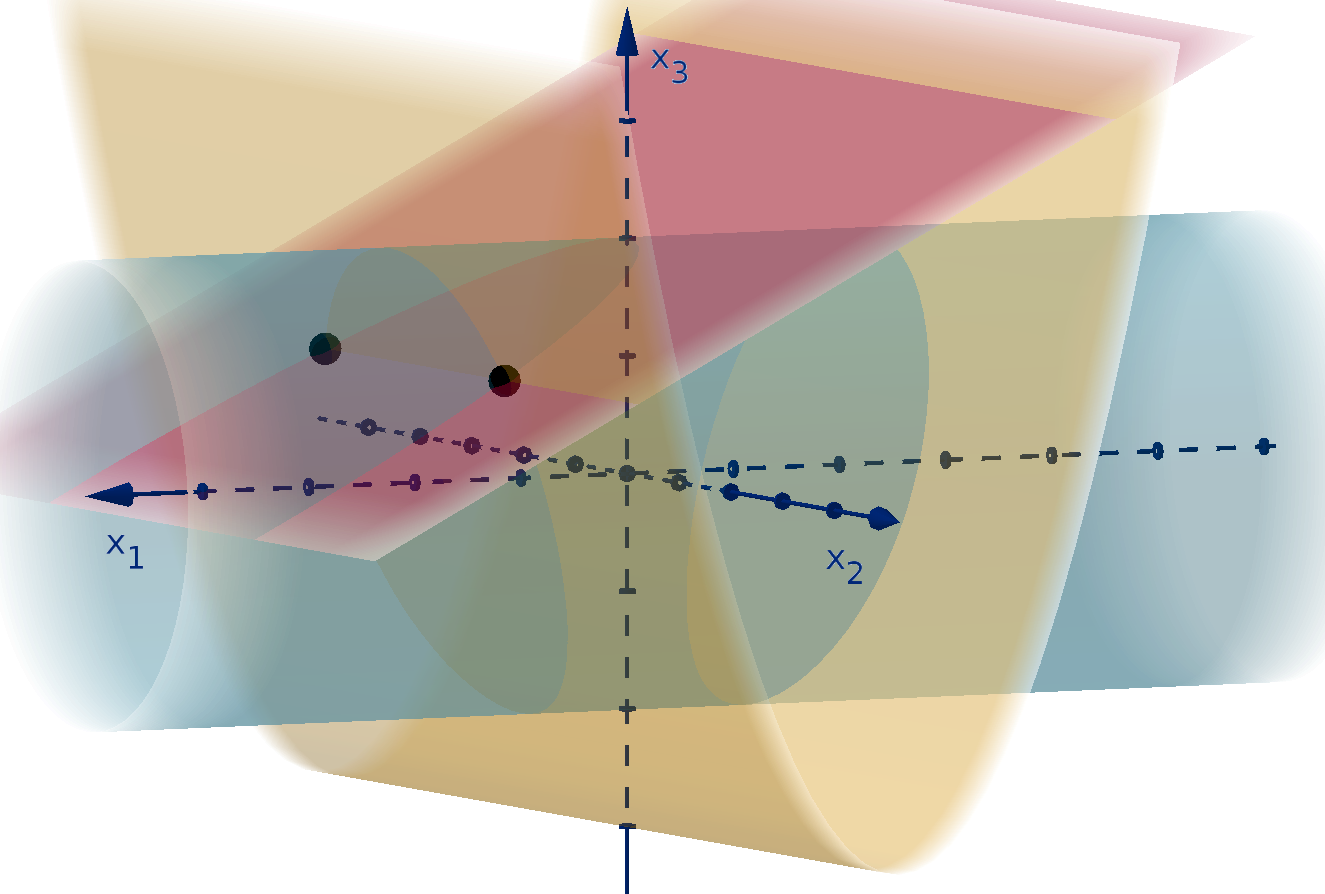

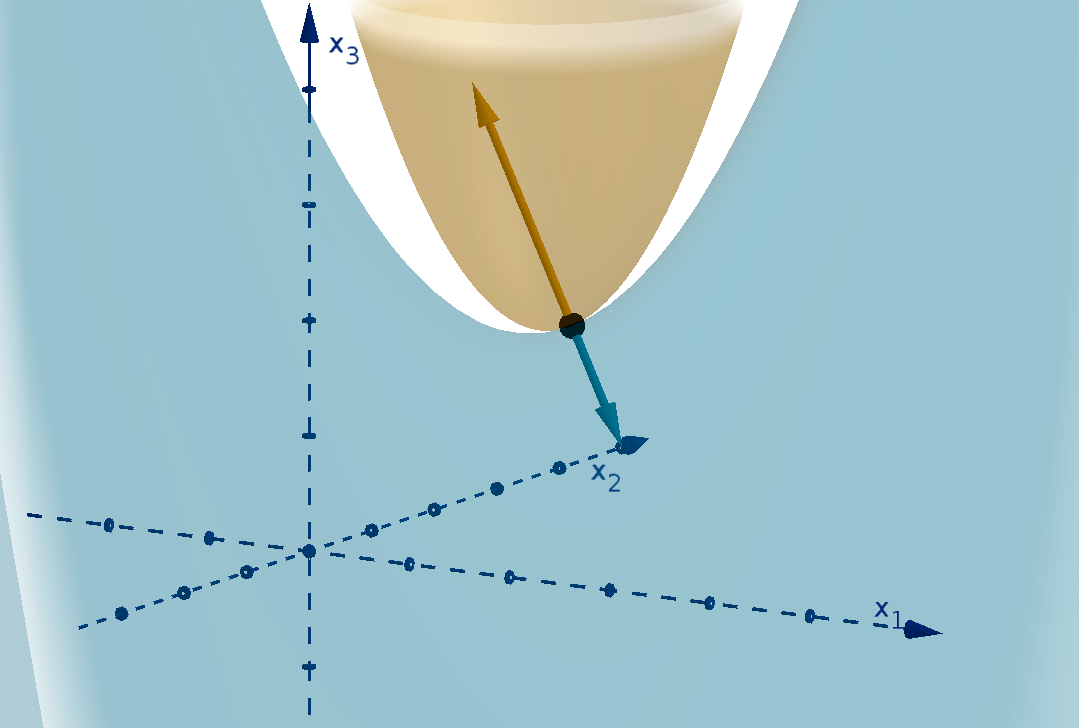

In the two variable case, we can interpret f

1

(x) as the slope of the tangent line to the graph

y = f(x

1

, x

2

) in the x

1

-direction. Higher-dimensional partial derivatives are also slopes, but are harder

to visualize.

21

0.3.2

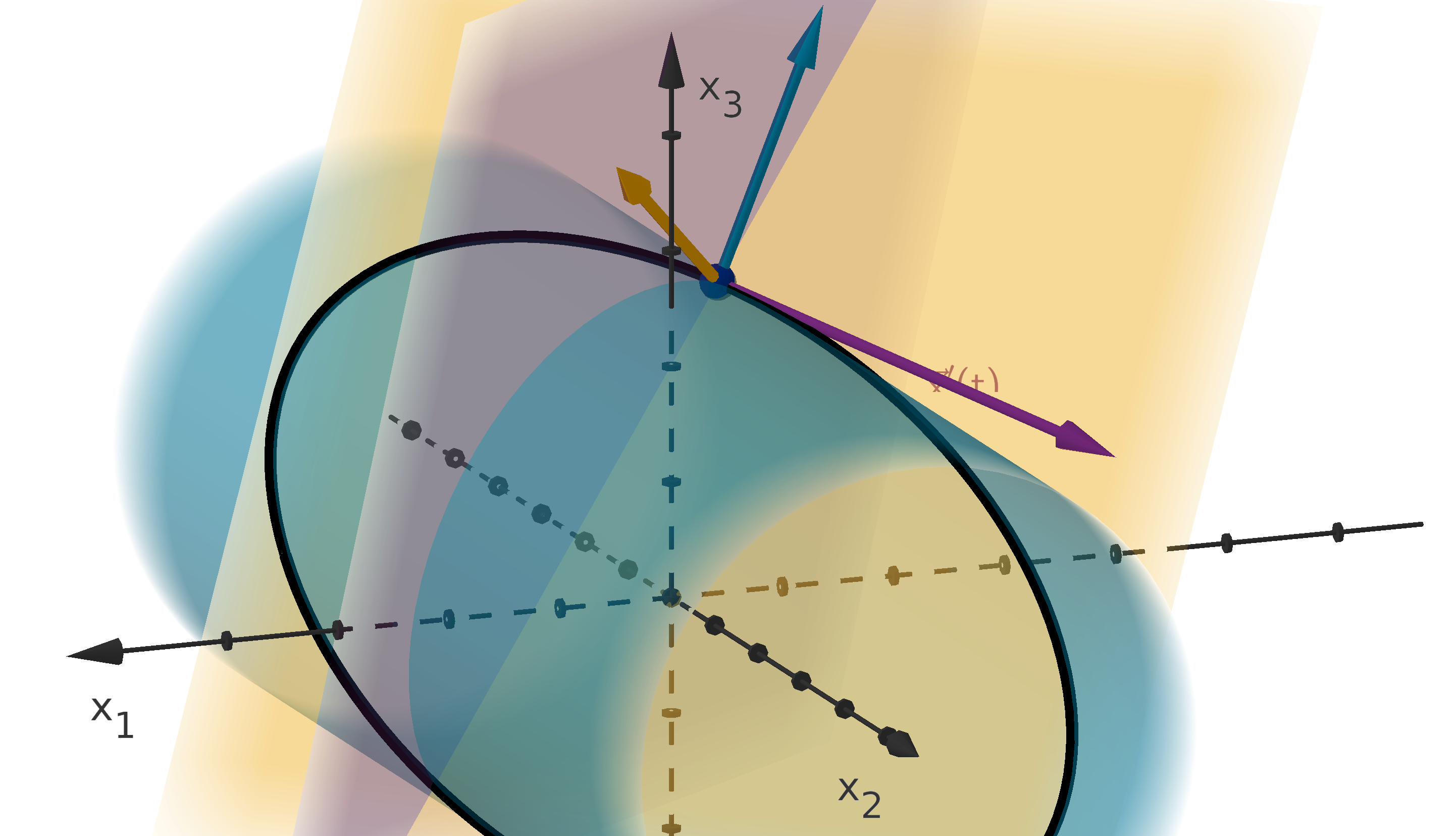

Partial Derivatives

Figure 13: The tangent line to y = f (x

1

, x

2

) in the x

1

-direction

Computing a partial derivative requires us to treat the non-changing variables as constants. Then

we can perform ordinary single-variable differentiation with the respect to the variable that is changing.

0.3.3

Computing a Partial Derivative

The profit of a firm with a Cobb-Douglas production function might be modeled by

π(L, K) = pL

α

K

β

− wL − rK.

We can compute the partial derivative π

L

(L, K), which measures the marginal effect of hiring more

labor.

Solution

Since π

L

(L, K) is a partial derivative, we can treat K as a constant. That means that neither K

β

nor

rK is changing. We treat K

β

as a constant multiple of the monomial function L

α

. We treat rK as a

constant term with derivative 0.

π

L

(L, K) = pαL

α−1

K

β

− w

22

0.3.4

Multivariable Limits and Continuity

Definition 0.15

A multivariable function f (x) is continuous at a, if

lim

x→a

f(x) = f(a)

In order to verify this limit, we must check that f(x) can be made arbitrarily close to f(a) by

restricting to a sufficiently small neighborhood of a. This neighborhood allows for travel in infinitely

many directions from a, rather than just forwards and backwards like a one-variable limit. This makes

multivariable limits difficult to compute rigorously and multivariable continuity difficult to verify directly.

Fortunately, we can use the same approach we used for single-variable functions.

Variant of Theorem 0.9

The following multivariable functions are continuous on their domains

1 Constant functions

2 Linear functions

3 Polynomials

4 Roots

5 Exponential functions

6 Logarithms

7 Trigonometric functions

23

0.3.4 Multivariable Limits and Continuity

Variant of Theorem 0.10

If f(x) and g(x) are continuous on their domains, and c is a constant, then the following are also

continuous on their domains

1 f(x) + g(x)

2 f(x) − g(x)

3 f(x)g(x)

4

f(x)

g(x)

(note that any x where g(x) = 0 is not in the domain)

5 f(x)

g(x)

as long as f(x) > 0

6 f(g(x)) where f (x) is a one-variable function

Multivariable continuity becomes important when discussing derivatives. Partial derivatives do not

use multivariable limits. They use a limit as a single variable h goes to 0. For this reason, we are not

guaranteed that partial derivatives reliably model the shape of a function.

Example

Consider the function

f(x

1

, x

2

) =

(

0 if x

2

≤ 0

x

1

if x

2

> 0

This function is 0 when x

1

= 0 or x

2

= 0. Thus the partial derivatives at (0, 0) are

f

1

(0, 0) = 0 f

2

(0, 0) = 0

If we increase x

1

while holding x

2

constant or increase x

2

while holding x

1

constant, then the function

stays constant at 0. This does not reflect the fact that if we increase both x

1

and x

2

at (0, 0), the

function will have a positive slope.

Many theorems rely on a function behaving consistently with its partial derivatives no matter which

direction we travel. The following property will usually serve that purpose.

Definition 0.16

A function f(x) is continuously differentiable, if all the partial derivative functions f

i

(x) are continuous

functions. If instead they are all continuous at a point a, we say f(x) is continuously differentiable at

a.

24

0.3.5

The Chain Rule

How do model the change of a multivariable function when more than one input variable is changing?

We write each input variable as a function of a parameter. For instance, if x

1

and x

2

are both changing

we can write each as a function of a parameter t. We can combine these to define a vector function:

x(t) = (x

1

(t), x

2

(t)).

f(x(t)) is a composition of functions. If we have defined x

1

(t) and x

2

(t) to correctly model the change

we want in x

1

and x

2

, then the derivative of f(x(t)) will tell us how f is changing as well. Notice

f(x(t)) is a single variable function. The value of t determines its value completely. The multivariable

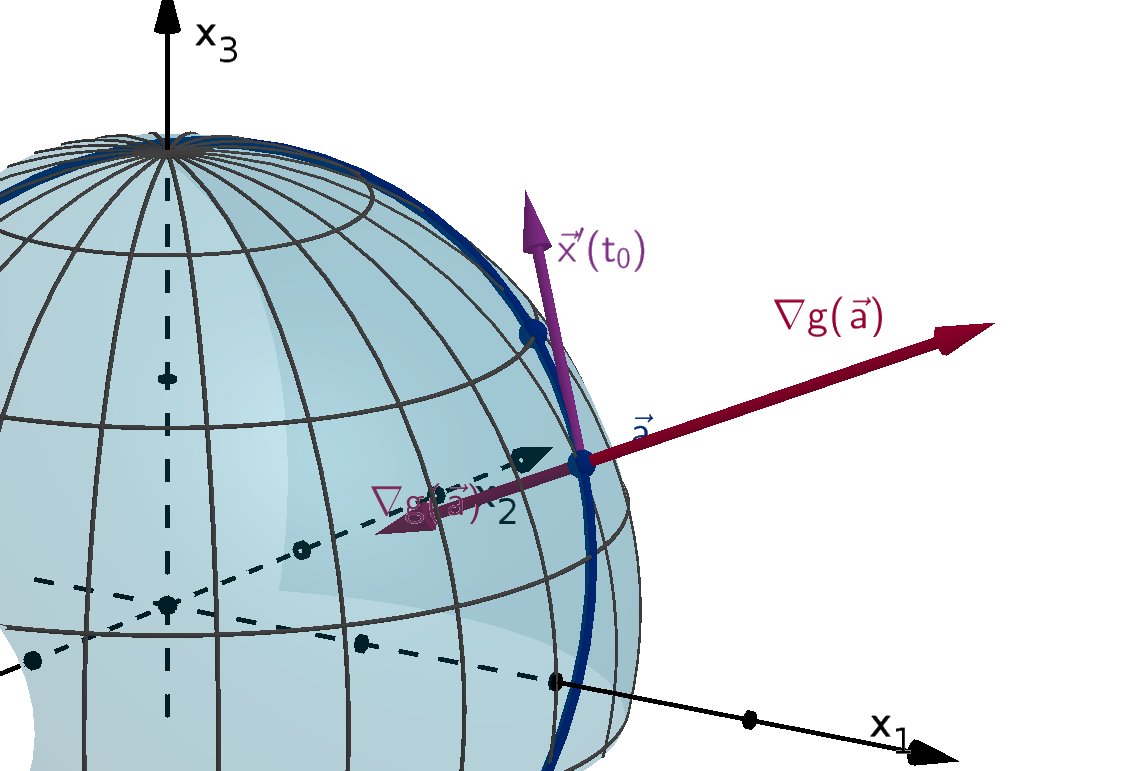

chain rule computes its derivative using x(t) and the gradient of f(x).

Definition 0.17

Given a function f(x), the gradient vector of f at x is

∇f(x) = (f

1

(x), f

2

(x), . . . , f

n

(x))

Theorem 0.18 [The Multivariable Chain Rule]

Suppose f(x) is a continuously differentiable, n-variable function. If x(t) is differentiable, then the

derivative of the composition f(x(t)) with respect to t is

df

dt

(t) = ∇f(x(t)) · x

′

(t)

or

df

dt

(t) =

n

X

i=1

f

i

(x(t))x

′

i

(t)

We should avoid using the notations f

′

(t) and f

t

(t) for derivatives of compositions. Instead, we use

Leibniz notation. This makes the variable of differentiation clear without implying that we are computing

a partial derivative.

25

0.3.6

Applying the Chain Rule

Suppose f(x

1

, x

2

) =

ln x

1

x

2

. If x

1

(t) = t

2

and x

2

(t) = e

t

, compute

d

dt

f(x

1

(t), x

2

(t)).

Solution

According to the chain rule

d

dt

f(x

1

(t), x

2

(t)) = f

x

1

(x

1

(t), x

2

(t))x

′

1

(t) + f

x

2

(x

1

(t), x

2

(t))x

′

2

(t)

=

1

x

1

(t)x

2

(t)

x

′

1

(t) −

ln x

1

x

2

2

x

′

2

(t)

=

1

t

2

e

t

2t −

ln t

2

e

2t

e

t

=

2

te

t

−

ln t

2

e

t

=

2 − t ln t

2

te

t

Remark

The multivariable chain rule is not useful for direct calculations. Substituting the expressions for x

1

(t)

and x

2

(t) would have given us

f(x

1

(t), x

2

(t)) =

ln t

2

e

t

.

We can differentiate this using single-variable methods to obtain the same answer. The multivariable

chain rule will instead serve us well in more abstract situations.

0.3.7

Alternate Notations and the Chain Rule

The multivariable chain rule is easiest to state when we give the component functions names that

match the variables of f. When this is not the case, we need to take care with our notation.

26

Example

Let f (x, y) be a continuously differentiable function. Let x and y be defined by differentiable functions

g(t) and h(t) respectively. The chain rule states that

d

dt

f(g(t), h(t)) = f

x

(g(t), h(t))g

′

(t) + f

y

(g(t), h(t))h

′

(t).

We do not write f

g

or f

h

in this example. f is defined as a function of x and y, so those are the only

partial derivatives it has.

Some applications use one of the variables of f as the parameter. The simplest example gives an

alternate formulation for the partial derivative.

Example

Let f(x

1

, x

2

) be a continuously differentiable function. Let x

1

be the identity function of itself and let

x

2

to be a constant function of x

1

.

x

1

= x

1

dx

1

dx

1

= 1

x

2

= c

dc

dx

1

= 0

The rate of change with this parameterization should reflect that x

1

is changing and x

2

is not. That is

exactly what the chain rule tells us.

d

dx

1

f(x

1

, c) = f

x

1

(x

1

, c)

dx

1

dx

1

+ f

x

2

(x

1

, c)

dc

dx

1

= f

x

1

(x

1

, c)(1) + f

x

2

(x

1

, c)(0)

= f

x

1

(x

1

, c)

We will build upon this formulation when we compute comparative statics.

Finally, we should note that the chain rule applies when the x

i

are multivariable functions of a vector

t. In this case, f(x(

t)) is a function of

t and thus we can compute its partial derivatives.

27

0.3.7 Alternate Notations and the Chain Rule

Generalization of Theorem 0.18

Suppose f(x) is a continuously differentiable, n-variable function. If x

i

(

t) are differentiable functions of

the variables t

j

, then the partial derivative of the composition f (x(

t)) with respect to t

k

is

∂f

∂t

k

(

t) = ∇f(x(

t)) · x

k

(

t)

or

∂f

∂t

k

(

t) =

n

X

i=1

f

i

(x(

t))

∂x

i

∂t

k

(

t)

This generalization follows immediately from treating each t

j

except t

k

as a constant.

0.3.8

Proving the Multivariable Chain Rule

The proof of the multivariable chain rule uses the same tools as the single variable chain rule (and

product rule). However, multivariable limits are much more difficult to verify than single variable limits.

To check that lim

x→a

f(x) = L, we have to consider values of x in every direction from a, not just forward

or backwards along a line. We will sketch the proof for the case where f is a two-variable function.

Even the sketch is quite technical. It contains no arguments that are important enough to commit to

memory.

Proof

We apply the definition of a derivative

df

dt

= lim

h→0

f(x(t + h)) − f(x(t))

h

= lim

h→0

f(x

1

(t + h), x

2

(t + h)) − f(x

1

(t), x

2

(t))

h

We break up this limit into a sum of two limits by adding and subtracting a term and regrouping the

result (assuming the limit of each summand exists)

= lim

h→0

f(x

1

(t + h), x

2

(t + h)) − f(x

1

(t), x

2

(t + h)) + f(x

1

(t), x

2

(t + h)) − f(x

1

(t), x

2

(t))

h

= lim

h→0

f(x

1

(t + h), x

2

(t + h)) − f(x

1

(t), x

2

(t + h))

h

+ lim

h→0

f(x

1

(t), x

2

(t + h)) − f(x

1

(t), x

2

(t))

h

28

Next we multiply each limit by 1, represented as a quotient of an expression divided by itself.

= lim

h→0

f(x

1

(t + h), x

2

(t + h)) − f(x

1

(t), x

2

(t + h))

h

x

1

(t + h) − x

1

(t)

x

1

(t + h) − x

1

(t)

+ lim

h→0

f(x

1

(t), x

2

(t + h)) − f(x

1

(t), x

2

(t))

h

x

2

(t + h) − x

2

(t)

x

2

(t + h) − x

2

(t)

Naturally

x

i

(t+h)−x

i

(t)

x

i

(t+h)−x

i

(t)

evaluates to

0

0

when h = 0. This is not a problem for a limit. However, if it

also evaluates to

0

0

at other values of h, no matter how small a neighborhood of h = 0 we choose,

then another approach is needed. In this case, the entire term will limit to 0, but we omit the formal

argument from this sketch. Instead we assume all is well, and we reorganize each product by swapping

denominators.

= lim

h→0

f(x

1

(t + h), x

2

(t + h)) − f(x

1

(t), x

2

(t + h))

x

1

(t + h) − x

1

(t)

x

1

(t + h) − x

1

(t)

h

+ lim

h→0

f(x

1

(t), x

2

(t + h)) − f(x

1

(t), x

2

(t))

x

2

(t + h) − x

2

(t)

x

2

(t + h) − x

2

(t)

h

We break up the result as a product of limits (assuming the limit of each factor exists)

= lim

h→0

f(x

1

(t + h), x

2

(t + h)) − f(x

1

(t), x

2

(t + h))

x

1

(t + h) − x

1

(t)

lim

h→0

x

1

(t + h) − x

1

(t)

h

+ lim

h→0

f(x

1

(t), x

2

(t + h)) − f(x

1

(t), x

2

(t))

x

2

(t + h) − x

2

(t)

lim

h→0

x

2

(t + h) − x

2

(t)

h

The second factor of each product now looks like a derivative. To make first factors look more like

derivatives, we let j = x

1

(t + h) −x

1

(t) and k = x

2

(t + h) −x

2

(t). These quantities go to 0 as h → 0.

Our limits can be rewritten as

= lim

(h,j)→(0,0)

f(x

1

(t) + j, x

2

(t + h)) − f(x

1

(t), x

2

(t + h))

j

lim

h→0

x

1

(t + h) − x

1

(t)

h

+ lim

(h,k)→(0,0)

f(x

1

(t), x

2

(t) + k) − f(x

1

(t), x

2

(t))

k

lim

h→0

x

2

(t + h) − x

2

(t)

h

At this point we have four limits, each of which looks like the definition of a derivative. We can

replace each one with its derivative notation. In general, we cannot evaluate a multivariable limit by

handling one variable at a time, but the fact that the partial derivatives are continuous allows us to do

so here. The details of this kind of argument are covered in an analysis course.

= lim

h→0

f

1

(x

1

(t), x

2

(t + h))x

′

1

(t) + lim

h→0

f

2

(x

1

(t), x

2

(t))x

′

2

(t)

= f

1

(x

1

(t), x

2

(t))x

′

1

(t) + f

2

(x

1

(t), x

2

(t))x

′

2

(t)

This is the dot product

= ∇f(x(t)) · x

′

(t)

One can adapt this proof to a higher dimension by breaking the limit into more summands. The

theorem we gave is even more general, because applies to an n-variable function. To prove that version,

we would use a proof by induction.

29

0.3.8 Proving the Multivariable Chain Rule

30

Chapter 1

Unconstrained Optimization

1.1

Single-Variable Optimization

Goals:

1 Know the definition of a local or global maximizer.

2 Apply the first- and second-order conditions to calculate maximizers.

3 Distinguish between necessary and sufficient conditions and recognize the role of each in optimiza-

tion.

4 Understand the role of the derivative in proving the first- and second-order conditions.

Some of the most important methods in calculus are those that identify maximizers and minimizers

of functions. This section gives precise theorems to describe those methods. We will also examine

the distinct but complementary roles played by necessary conditions and sufficient conditions. Finally,

we will give a reasonably compact formal basis to prove the theorems of this section and support the

theorems in the sections that follow.

1.1.1

The First-Order Condition

Given a function, we are interested in what inputs of that function will produce the largest or

smallest values of that function. These inputs are called maximizers and minimizers. In order to identify

maximizer and minimizers, we need to have a rigorous definition that we can verify.

Definition 1.1

Suppose a lies in the domain of a function f(x).

1 a is a maximizer of f if f(a) ≥ f(x) for all other x in the domain of f. In this case f(a) is called

the maximum of f.

2 a is a local maximizer of f if f(a) ≥ f(x) for all other x in some neighborhood of a. It is a

strict local maximizer if f (a) > f(x) instead. In either case f(a) is called a local maximum of

f.

3 a is a minimizer of f if f(a) ≤ f(x) for all other x in the domain of f. In this case f(a) is called

the minimum of f.

4 a is a local minimizer of f if f(a) ≤ f (x) for all other x in some neighborhood of a. It is a

strict local minimizer if f(a) < f(x) (than 0) to the left of a and smaller values (than 0) to the

right. Thus, travelinstead. In either case f (a) is called a local minimum of f .

32

Remark

When we are trying to draw a contrast with a local maximizer, sometimes we use global maximizer or

absolute maximizer to refer to a maximizer of a function.

The difficulty in finding maximizers is that the domain has infinitely many points. Using only the

definition, we would need to evaluate them all, one by one, to find a maximizer. This is obviously

impossible. Thankfully, calculus gives us a way to narrow down the search.

Derivatives measure the rate of change of a function. Knowing whether the rate is positive or negative

should tell us how f(a) compares to nearby values. Here is a way to formally state that relationship.

Lemma 1.2

If f

′

(a) > 0, then for all x in some neighborhood of a,

1 f(x) > f(a) if x > a

2 f(x) < f(a) if x < a.

Figure 1.1: The graph y = f (x) and a neighborhood where it stays close to its tangent line

Remark

A lemma is a statement that is not particularly interesting on its own. It is used as a step to proving a

more important result.

We will provide a formal proof for this lemma later. The main argument is that near a, y = f(x)

lies close enough to the tangent line at a to mimic its behavior, attaining larger values for x > a and

33

1.1.1 The First-Order Condition

smaller values for x < a. Notice that this behavior does not need to persist for all x. We cannot say

how long y = f(x) will stay close to its tangent line. It may be that the neighborhood where it does is

quite small.

We can make the same argument for functions with a negative derivative, except the behavior is

backwards. Aside from switching the direction of some inequalities, the proof is identical. Rather than

treat this result as its own lemma, we present it as a variant.

Variant of Lemma 1.2

If f

′

(a) < 0, then for all x in some neighborhood of a,

1 f(x) < f(a) if x > a

2 f(x) > f(a) if x < a.

The existence of nearby x such that f(x) > f (a) is inconsistent with the definition of a local

maximizer. Lemma 1.2 and its variant guarantee such x, so:

If f

′

(a) > 0, then a is not a local maximizer.

If f

′

(a) < 0, then a is not a local maximizer.

We convert these statements to their contrapositives. If a is a local maximizer, then f

′

(a) is neither

positive nor negative. This gives us the following condition.

Theorem 1.3 [The First-Order Condition (FOC)]

Let a be a local maximizer or local minimizer of f(x). Either f

′

(a) does not exist or f

′

(a) = 0.

Definition 1.4

The values of x that satisfy the first-order condition are called critical points.

34

1.1.2

Applying the First-Order Condition

What does the first-order condition tell us about f (x) = 8x

3

− x

4

?

Solution

The first-order condition tells us that a local maximizer or minimizer only occurs where the derivative

is 0 or undefined. The derivative of this function is 24x

2

− 4x

3

. It is defined for all x, so we solve for

where it is 0.

f

′

(x) = 0

24x

2

− 4x

3

= 0

4x

2

(6 − x) = 0

x = 0 or x = 6

This means that no point except x = 0 or x = 6 can be a local maximizer or minimizer.

−4 −2

2 4 6 8 10

−200

200

400

600

y = f(x)

x

y

Figure 1.2: The graph of f(x) = 8x

3

− x

4

Remark

The first-order condition does not tell us that either of x = 0 or x = 6 must a local maximizer or a local

minimizer. In fact, x = 6 is a local maximizer but x = 0 is neither.

35

1.1.3

The Second-Order Condition

As the previous example shows, the first-order condition is limited in its conclusion. Knowing the

value of f

′

(a) is not enough to give us certainty about the shape of the graph near a. For that we need

to know how the first derivative is changing at a. The change in the first derivative is measured by

the second derivative. The second derivative function, denoted f

′′

(x), is the derivative of the function

f

′

(x). The sign of the second derivative allows us to classify some critical points.

Theorem 1.5 [The Second-Order Condition (SOC)]

If f

′

(a) = 0 and f

′′

(a) < 0, then a is a strict local maximizer of f.

−2 −1

1 2 3 4 5

−10

10

20

y = f(x)

y = f

′

(x)

(2, f(2))

y = f(x)

x

y

Figure 1.3: A neighborhood where a = 2 is the maximizer of f (x)

The intuition behind this result relies on the fact that f

′′

(a) is the derivative of f

′

(x) at a. If

f

′′

(a) < 0, then f

′

(a) takes on larger values (than 0) to the left of a and smaller values (than 0) to the

right. Traveling left to right, the function increases until it reaches a. After passing a, it decreases.

Naturally, we have the following variant.

Variant of Theorem 1.5

If f

′

(a) = 0 and f

′′

(a) > 0, then a is a strict local minimizer of f.

Remark

This is sometimes called the local second-order condition, since it gives information about local

maximizers.

We cannot conclude anything, if f

′′

(a) = 0. a may be a maximizer, a minimizer or neither.

36

1.1.4

Applying the Second-Order Condition

What does the second-order condition tell us about f (x) = 8x

3

− x

4

?

Solution

The second-order condition requires f

′

(x) = 0. We’ve already shown that this only occurs at x = 0 and

x = 6. The other part of the condition requires us to compute the second derivative.

f

′

(x) = 24x

2

− 4x

3

f

′′

(x) = 48x − 12x

2

f

′′

(0) = 0

f

′′

(6) = −144 < 0

This means that x = 6 is strict local maximizer. The second-order condition does not tell us anything

about x = 0.

1.1.5

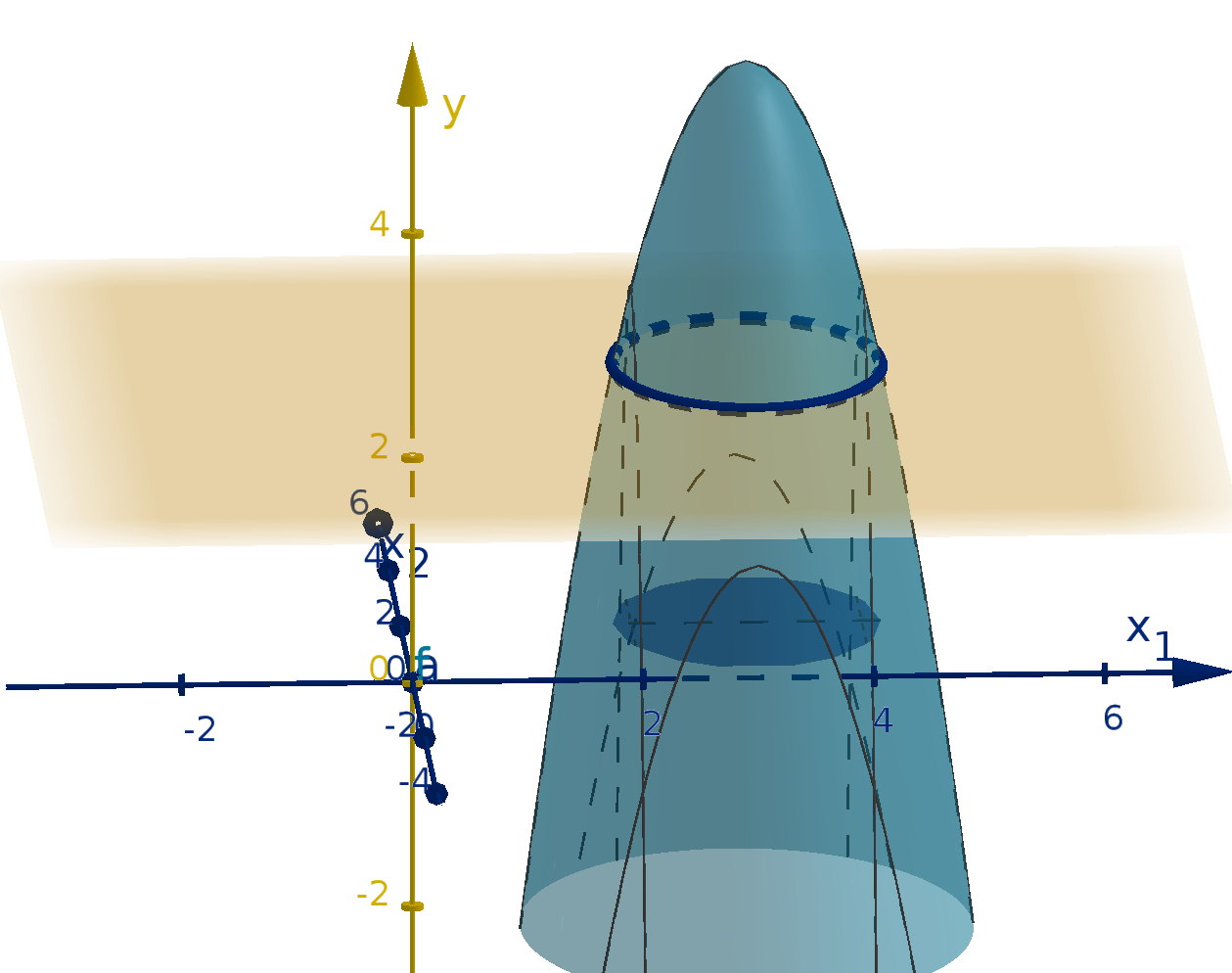

The Global Second-Order Condition

A firm seeking to increase its profits does not have much use for a local maximizer. The excuse:

“Our strategy was superior to all numerically similar strategies” will not impress a board of directors.

Nor would any rational actor settle for a mere local maximizer of their utility function. Utility maximizers

and profit maximizers want to find the global maximizer. If we know more about the second derivative

of the utility function, we can identify such a value.

Theorem 1.6 [The Global Second-Order Condition (GSOC)]

If f

′

(x

∗

) = 0 and f

′′

(x) < 0 for all x, then x

∗

is the only critical point of f and is the unique global

maximizer of f.

37

1.1.5 The Global Second-Order Condition

Remark

Unlike the (local) second-order condition, this theorem requires that the second derivative is

negative everywhere, not just at x

∗

.

In return for a stronger requirement, we obtain a much stronger conclusion.

Economists traditionally use x

∗

to denote a global maximizer. Thus we will use x

∗

to denote a

known maximizer or any point that will imminently be identified as a maximizer.

−2

2 4

−2

−1

1

2

3

(2, f(2))

y = f(x)

y = f

′

(x)

x

y

Figure 1.4: A function and its derivative near a maximizer

Unlike in the local case, we can make some use of a zero second derivative here.

Variants of Theorem 1.6

1 If f

′

(x

∗

) = 0 and f

′′

(x) > 0 for all x, then x

∗

is the only critical point of f and is the global

minimizer of f.

2 If f

′

(x

∗

) = 0 and f

′′

(x) ≤ 0 for all x, then x

∗

is a global maximizer of f (not necessarily unique).

3 If f

′

(x

∗

) = 0 and f

′′

(x) ≥ 0 for all x, then x

∗

is a global minimizer of f (not necessarily unique).

38

1.1.6

Sufficient and Necessary Conditions

The conclusions we draw from the first-order condition are fundamentally different from the con-

clusions that we draw from the second-order conditions. Neither of them allows us to fully answer the

question of which points are local maximizers of f . We can see the difference in their structure

FOC: If a is a local maximizer, then [condition]

SOC: If [condition], then a is a local maximizer

Mathematics has the following vocabulary for describing this difference.

Definition 1.7

Suppose we have a condition P that we are using to detect whether a statement Q is true or false.

1 A statement of the form “If Q then P ” indicates that P is a necessary condition for Q.

2 A statement of the form “If P then Q” indicates that P is a sufficient condition for Q.

Remark

We can find uses for both necessary and sufficient conditions, but we need to be careful to interpret

their conclusions correctly.

If you want to show that Q is true, you need to use a sufficient condition. A necessary condition

will not suffice.

If you want to rule out Q being true, one way is to show that a necessary condition is not satisfied.

Going forward, it is important to identify each new result as being necessary or sufficient (or maybe

both). We can begin by seeing how these terms apply to the first- and second-order conditions.

The first-order condition is a necessary condition. You can not have a local maximizer without

satisfying it. It is not a sufficient condition. A point can satisfy the first-order condition without being

a local maximizer.

Example

Let f(x) = x

3

. The value a = 0 satisfies the FOC but is not a local maximizer (or minimizer).

39

1.1.6

Sufficient and Necessary Conditions

This means that the first-order condition can only rule out values that are not maximizers. We

cannot conclude that a point is a maximizer just by showing that it satisfies the first-order condition.

The second-order condition is a sufficient condition. If f

′

(a) = 0 and f

′′

(a) < 0 then a must be a

local maximizer. It is not a necessary condition: there may be local maximizers that do not satisfy the

second-order condition.

Example

Let f(x) = −x

4

. The local maximizer a = 0 does not satisfy the SOC, because f

′′

(0) = 0.

The global second-order condition is also a sufficient condition and not a necessary one. For example,

a function can have a global maximizer without satisfying it.

−2

2 4

−1

1

2

3

(1, f(1))

y = f(x)

x

y

Figure 1.5: The graph of f(x) =

2

x

2

−2x+2

, which has a global maximizer and unique critical point at

x

∗

= 1, but does not satisfy the GSOC.

Remark

Abstractly, there is no difference between P and Q. There are two ways to view the statement:

If P , then Q.

P is a sufficient condition for Q.

Q is a necessary condition for P

However, we like to think of conditions as statements that we use to test for what we really care about.

So while, abstractly, being a local maximizer is a sufficient condition for f

′

(a) = 0, this does not reflect

the way we use the first-order condition in practice.

Generally we will want to have both necessary and conditions to test for properties that we care about.

With the tools we have so far, we can determine that certain points are local or global maximizers. We

40

can determine that many points are not local maximizers. Still, there may be points that satisfy the first-

order condition but not the second-order condition. We cannot tell whether these are local maximizers

or not.

The best condition would be a condition that is both necessary and sufficient. No such tool exists for

general optimization, so we are on the lookout for additional conditions to apply when the ones we have

are inconclusive. Coming up with new conditions is usually hard work, but we can obtain one easily, if

we exploit the relationship between maximizers and minimizers.

A sufficient condition for a minimizer can be turned into a necessary condition for a maximizer. If

f

′

(a) = 0 and f

′′

(a) > 0 then by a variant of Theorem 1.5, a is a strict local minimizer. Thus it cannot

be a maximizer. On the other hand, if f

′′

(a) exists but f

′

(a) = 0 then a is still not a local maximizer.

This leaves only the following possibilities for the second derivative at a local maximizer a.

Theorem 1.8

If a is a local maximizer of f, then f

′′

(a) ≤ 0 or f

′′

(a) does not exist.

1.1.7

Proving the First-Order Condition

In general, we expect a positive derivative to mean that greater values of x produce greater values

of f(x). The lemma at the heart of the first-order condition described what we can conclude when a

derivative is positive at one point.

The derivative is a limit, so any formal argument needs to start there. Proofs about limits can require

extensive computations and creative problem solving. Fortunately, we will only need the following lemma

about limits.

Lemma 1.9

If lim

x→a

f(x) = L and L > 0, then there is a neighborhood of a on which f is positive.

Proof

Since L is positive, L/2 is also positive. By definition of a limit there is some neighborhood of a in

which f(x) is within L/2 of L. We can express that distance with an absolute value.

|f(x) − L| < L/2

−L/2 < f(x) − L < L/2

L/2 < f(x) < 3L/2

Since L/2 is positive, so is f(x).

41

1.1.7 Proving the First-Order Condition

Variant of Lemma 1.9

If lim

x→a

f(x) = L and L < 0, then there is a neighborhood of a on which f is negative.

We are now in a position to prove Lemma 1.2.

Lemma 1.2

If f

′

(a) > 0, then for all x in some neighborhood of a,

1 f(x) > f(a) if x > a

2 f(x) < f(a) if x < a.

Proof

Since f

′

(a) = lim

h→0

f(a + h) − f (a)

h

> 0, Lemma 1.9 guarantees that there is a neighborhood of h

values near 0 where

f(a+h)−f (a)

h

> 0. Let x = a + h. In the corresponding neighborhood of x values

around a, we can make the following computations.

1 If x > a then h > 0 and

f(a + h) − f (a)

h

> 0

f(x) − f(a)

h

> 0

f(x) − f(a) > 0

f(x) > f(a)

2 If x < a then h < 0 and

f(a + h) − f (a)

h

> 0

f(x) − f(a)

h

> 0

f(x) − f(a) < 0

f(x) < f(a)

42

Remark

This proof uses an earlier lemma. If you are carefully reading this proof to understand the argument,

you may need to look back at the lemma and think about how it is being used here.

Visually, the argument of this proof is that the secant lines from a have positive slope in some

neighborhood of a.

1.1.8

Proving the Second-Order Conditions

In order to prove the second-order condition, we need a stronger conclusion than Lemma 1.2. Calculus

teaches that a function with positive derivatives is increasing, while a function with negative derivatives

is decreasing. In order to make a rigorous argument, we should know formal definitions of increasing

and decreasing.

Definition 1.10

Let f(x) be a function, and let I be an interval in its domain.

f(x) is increasing if for any numbers a < b in the domain of f , f(a) < f(b).

f(x) is decreasing if for any numbers a < b in the domain of f , f(a) > f(b).

43

1.1.8 Proving the Second-Order Conditions

Figure 1.6: The graph of an increasing function

f

′

(a) > 0 is not enough to guarantee that f (x) increases in a neighborhood of a. f(x) may suffer

from oscillations that persist arbitrarily close to a (see Figure 1.1). If we want to use a derivative to show

that a function is increasing, we need to know the derivative is positive at every point on an interval.

Lemma 1.11

If f

′

(x) > 0 for all x on an interval I, then f (x) is increasing on I.

To prove this lemma, we need to show that when f

′

(x) > 0, the function satisfies the formal

definition of increasing that we saw above. This is surprisingly arduous to prove using the definition of

a derivative as our starting point. Here are two deceptively short proofs

Proof

Suppose a < b. Apply the fundamental theorem of calculus: f (b)−f (a) =

Z

b

a

f

′

(x) dx. The integrand

is positive over all of [a, b], so the integral is positive too. Thus f (b) > f(a).

44

Figure 1.7: The fundamental theorem of calculus applied to a positive derivative

Proof

Suppose a < b. Apply the mean value theorem: There is some c between a and b such that

f(b)−f (a)

b−a

=

f

′

(c). Since c ∈ I, we know that f

′

(c) > 0. Thus f(b) > f(a).

Figure 1.8: The point c, whose tangent line has the same slope as the secant from a to b

You should not be satisfied by these proofs. Each raises a new question.

1 How do you prove the fundamental theorem of calculus?

2 How do you prove the mean value theorem?

A satisfactory argument would either prove the missing result or find another method that eliminates

the need for them. Unfortunately, any of these approaches takes us into concepts beyond the scope of

these notes. You can expect to find their formal proofs in an analysis course.

Naturally, this lemma has variants. We can switch the direction of the inequality, but we can also

relax the strictness of the inequality.

45

1.1.8 Proving the Second-Order Conditions

Variants of Lemma 1.11

1 If f

′

(x) < 0 on an interval I, then f (x) is decreasing on I.

2 If f

′

(x) ≥ 0 on an interval I, then f(x) is non-decreasing on I, meaning that if a < b,

f(a) ≤ f(b).

3 If f

′

(x) ≤ 0 on an interval I, then f (x) is non-increasing on I, meaning that if a < b, f(a) ≥

f(b).

Moving forward, we will see strict and non-strict variants so frequently that when we don’t, it is

worth pondering why not.

With these lemmas in hand, we are ready to prove the second-order condition.

Theorem 1.5

If f

′

(a) = 0 and f

′′

(a) < 0, then a is a strict local maximizer of f.

Arguments about the second derivative usually rely on the fact that f

′′

(x) is the derivative of f

′

(x).

We will apply Lemma 1.9, letting f

′

(x) play the role of the original function with f

′′

(x) as its derivative.

We can thus use the sign of f

′′

(a) to compare the values of f

′

(x) and f

′

(a). Here is the formal

argument.

Proof

We suppose f

′

(a) = 0 and f

′′

(a) < 0. f

′′

(x) is the derivative of f

′

(x), and it is negative at a. A

variant of Lemma 1.9 applies. There is some neighborhood I of a where

if x > a, then f

′

(x) < f

′

(a) = 0

if x < a, then f

′

(x) > f

′

(a) = 0.

The second inequality shows, by Lemma 1.11, that f(x) is increasing to the left of x in I. By definition

of increasing this means that f(x) < f(a) for all x < a in I. Similarly, the first inequality shows that

f(x) is decreasing to the right of a in I. By definition of decreasing this means that f(x) < f(a) for

all x > a in I. Thus f(a) > f(x) for all other x in I. This satisfies the definition of a strict local

maximizer, so we conclude that a is a strict local maximizer of f .

The proof of the global second-order condition follows the same logic. The only difference is that

we can apply Lemma 1.11 instead of Lemma 1.9 to get a global statement about the first derivative on

either side of x

∗

. Here is the full text for the sake of completeness.

46

Proof

We suppose f

′

(x

∗

) = 0 and f

′′

(x) < 0 for all x. A variant of Lemma 1.11 tells us that f

′

(x) is

decreasing for all x. This means:

if x > x

∗

, then f

′

(x) < f

′

(x

∗

) = 0

if x < x

∗

, then f

′

(x) > f

′

(x

∗

) = 0.

The second inequality shows, by Lemma 1.11, that f(x) is increasing for all x < x

∗

. By definition of

increasing this means that f (x) < f (x

∗

) for all x < x

∗

. Similarly, the first inequality shows that f(x)

is decreasing for all x > x

∗

. By definition of decreasing this means that f(x) < f(x

∗

) for all x > x

∗

.

Thus f(x

∗

) > f (x) for all other x. This satisfies the definition of a unique maximizer, so we conclude

that x

∗

is a unique maximizer of f.

To show that x

∗

is the only critical point, we simply note that if there were another critical point,

it would also satisfy this condition and be the unique maximizer. Since there can be only one unique

maximizer, no such critical point can exist.

1.1.9

Section Summary

The most important definitions and results from this section were

The first-order condition (Theorem 1.3)

The second-order condition (Theorem 1.5)

The global second-order condition (Theorem 1.6)

Necessary and sufficient conditions (Definition 1.7)

f

′′

(x

∗

) < 0

and f

′

(x

∗

) = 0

f

′′

(x) < 0 for all x

and f

′

(x

∗

) = 0

x

∗

is a

strict local max

x

∗

is the

unique global max and

only critical point

f

′

(x

∗

) = 0 or DNEf

′′

(x

∗

) ≤ 0 or DNE

SOC

GSOC

FOCThm 1.8

Figure 1.9: Relationships between the conditions of single variable optimization

47

1.2

Concavity

Goals:

1 Recognize convex sets.

2 Recognize concave and convex functions visually.

3 Understand the difference between strict and non-strict convexity/concavity.

4 Verify concavity using inequalities, tangent lines or second derivatives as appropriate.

5 Use concavity to find maximizers of a function.

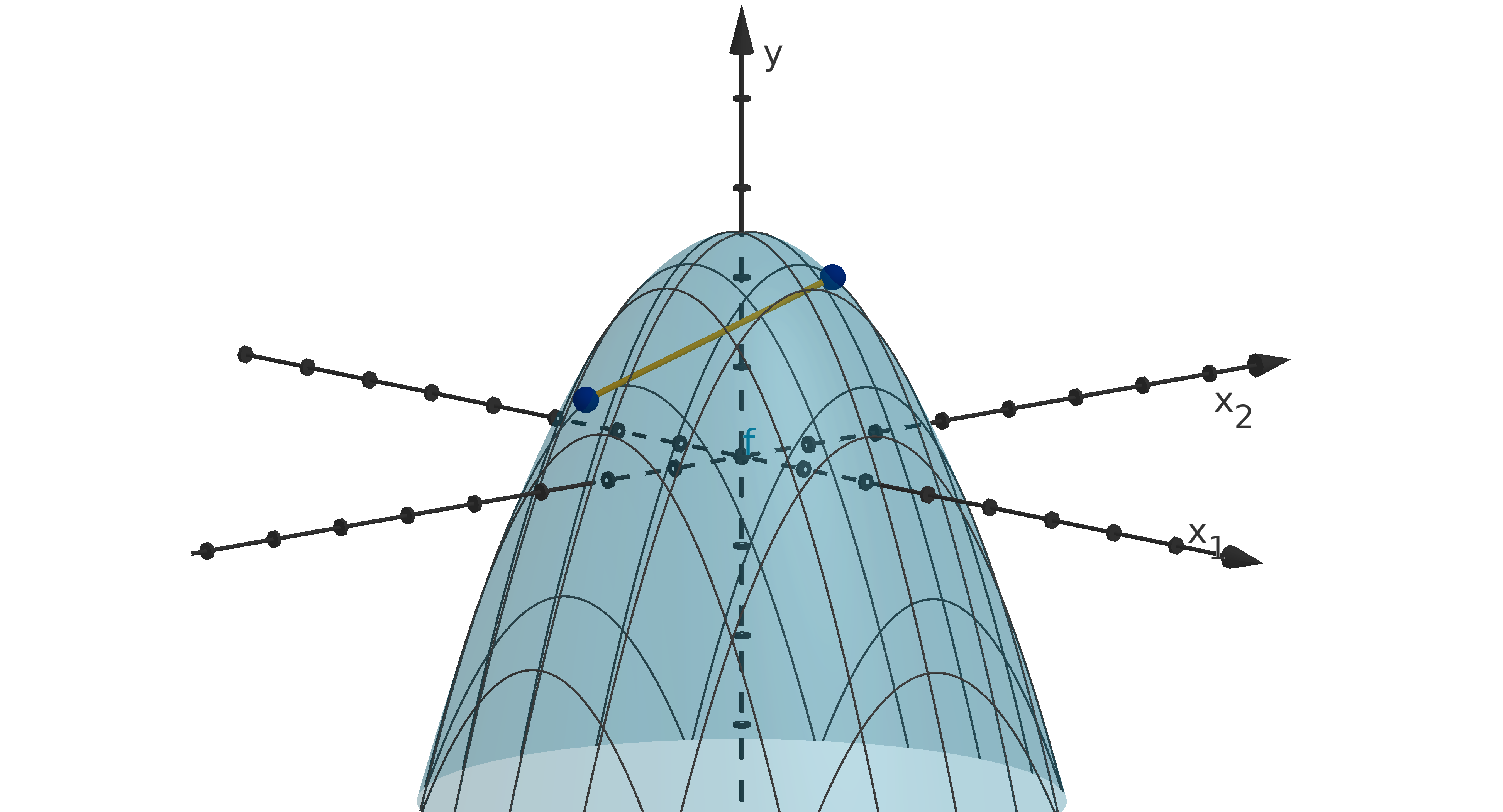

1.2.1

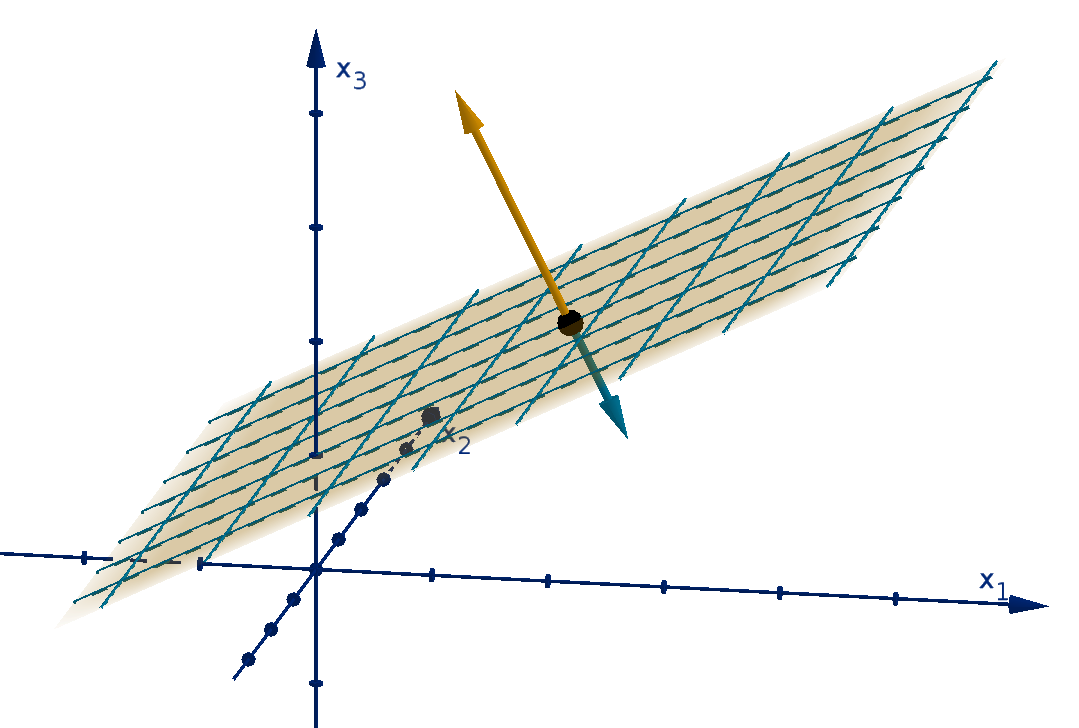

Convex Sets

Our goal is to understand what the shape of a graph tells us about the maximizer(s) of a function.

We would prefer methods that apply to both single-variable and multivariable functions. For this, we

need to be able to describe shapes in any dimension. Most students learn how to recognize a convex

polygon, but we can define convexity in a large variety of shapes. Convex regions can be angular or

smooth. They can exist in any dimension. To see this, we need the formal definition of convexity.

Definition 1.12

A region D ⊆ R

n

is convex, if the line segment between any two points a and

b in D entirely lies in

D.

There is no restriction on the dimension of D. We can even apply it to regions in the real line.

Example

If D is a subset of R

1

, then D is convex if and only if it is connected.

48

For a polygon, this definition should match our previous intuition of convexity. Convince yourself

that any two points in the convex polygons you learned about can be connected with a segment. In a

nonconvex polygon there is at least one pair of points whose line segment leaves the polygon. You can

convince yourself by picking your favorite nonconvex polygon and finding such a segment.

a

b

a

b

Figure 1.10: A convex polygon and a nonconvex polygon

We cannot make a rigorous case for convexity by drawing segments. There are too many to check.

We can instead make convexity more algebraic by parameterizing the line segment from a to

b.

x(t) = (1 − t)a + t

b 0 ≤ t ≤ 1

You can check that this is a line by writing it as

x(t) = a + t(

b −a) 0 ≤ t ≤ 1

x(0) = a. As t increases, x(t) travels in the direction of (

b −a) until arriving at

b when t = 1. To

check for convexity of D, we just need to test that the points of x(t) lie in D.

Figure 1.11: The line segment from a to

b

Furthermore, we do not need to check every a and

b. Any a and

b whose segment leaves D will

leave the at some boundary point c and reenter at some boundary point

d (Figure 1.12). Thus if we

49

1.2.1

Convex Sets

only checked segments between boundary points, the segment between c and

d would be sufficient to

indicate that D is not convex. We summarize this argument in the following theorem.

a

b

c

d

Figure 1.12: A segment that leaves a nonconvex region

Theorem 1.13

A region D is convex if for all a and

b in the boundary of D and all t in [0, 1],

(1 − t)a + t

b ∈ D

We visually identify convex polygons as polygons where every corner “points outward.” However,

the corners of the polygon are the only places that point outward. The rest of the boundary is flat,

pointing neither inward nor outward. With non-polygonal regions, it is possible to have a boundary that

points outward everywhere, not just at a few corners. This will be a useful property to keep track of, so

we have a name for such regions.

Definition 1.14

A region D is strictly convex, if the segment, not including the endpoints, between any two points a

and

b in D entirely lies in the interior of D.

Figure 1.13: Two convex regions, one strictly convex and the other not strictly convex

50

Remark

Every strictly convex region is also convex, but some convex regions are not strictly convex. We say

that strict convexity is a stronger condition and convexity is a weaker condition.

We are often interested in the intersection of two regions. For instance, if one region is the set of

points satisfying one condition, while a second region is the set of points satisfying a second conditions,

then their intersection is the points satisfying both conditions. Convexity behaves well in these situations.

Theorem 1.15

If D

1

and D

2

are convex regions, then D

1

∩ D

2

is convex.

The proof is a good exercise.

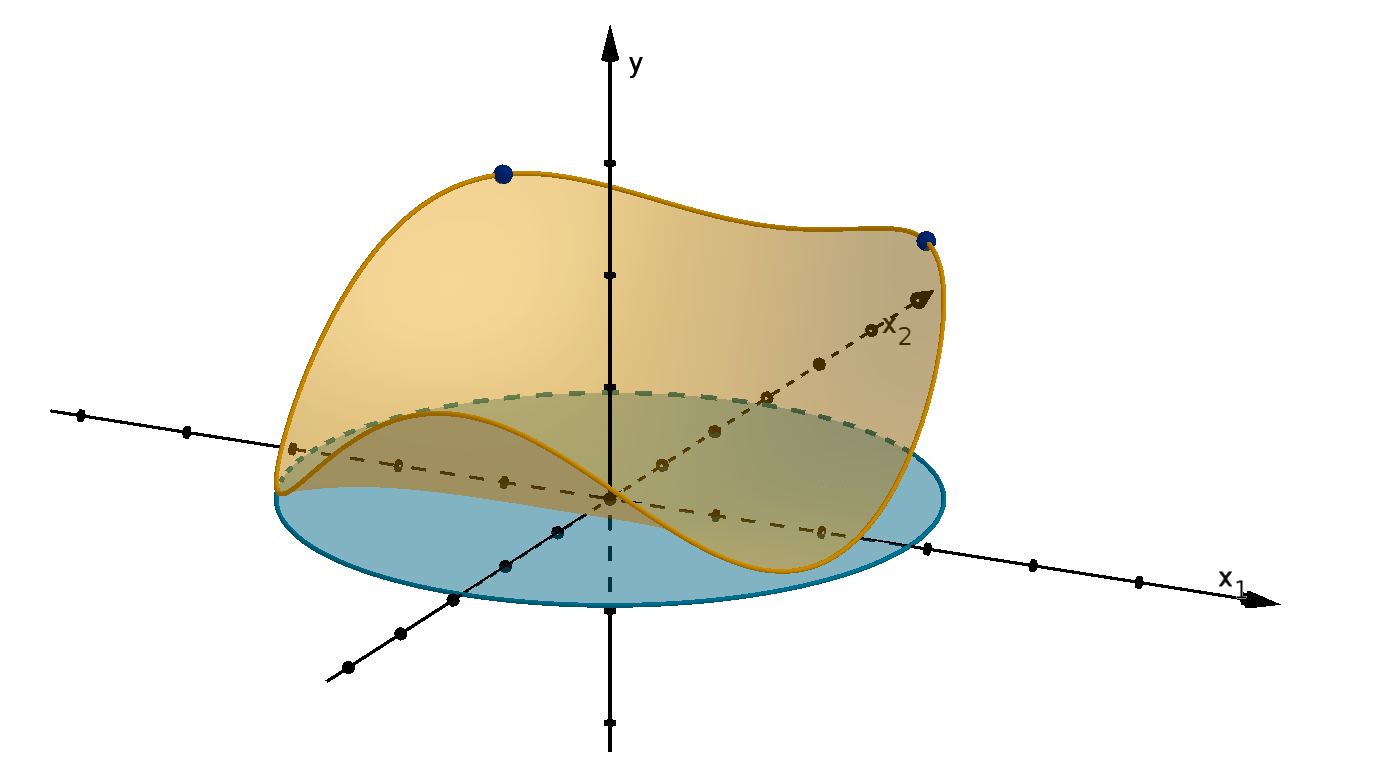

1.2.2

Concave Functions

Our next step is to use convexity to describe the shape of functions or, more precisely, their graphs.

Most students encounter convex and concave functions in calculus, though they are sometimes called

concave up and concave down. With a rigorous definition of a convex region, we are now in position to

define what it means to be a convex function.

Definition 1.16

Let f(x) be a function whose domain is convex. We say f(x) is convex, if y ≥ f(x), the region above

its graph, is convex. It is concave if y ≤ f(x), the region below its graph, is convex.

51

1.2.2

Concave Functions

Figure 1.14: A concave function

Remark

Be careful when learning these definitions. Students often expect the definition of concave function to

have something to do with a region being nonconvex (which is sometimes called concave), but it does

not. Nonconvex regions above and below graphs are unremarkable. It is when one of regions is convex

that the graph is special.

Figure 1.15: The graph of a function that is neither concave nor convex and the nonconvex regions

above and below it.

We might ask whether the region above or below y = f (x) is strictly convex, rather than merely

convex. If it is, we can pass the “strictness” onto our description of f.

Definition 1.17

Let f(x) be a function whose domain is convex. f (x) is strictly convex, if the region y ≥ f(x) is

strictly convex. It is strictly concave if the region y ≤ f(x) is strictly convex.

52

Remark

Strict concavity is a stronger condition than concavity. Every strictly concave function is also concave,

but some concave functions are not strictly concave.

Figure 1.16: A function that is concave but not strictly concave

Notice that the region below y = f(x) is a mirror image of the region above y = −f(x). If one is

convex, so is the other. We can make the following connection between a function and its negative.

Lemma 1.18

Let f(x) be a function.

1 f(x) is concave if and only if −f (x) is convex.

2 f(x) is strictly concave if and only if −f (x) is strictly convex.

We will use this lemma as an excuse to ignore convex functions. Any argument about concave

functions becomes an argument about convex functions by introducing a negative sign.

53

1.2.3

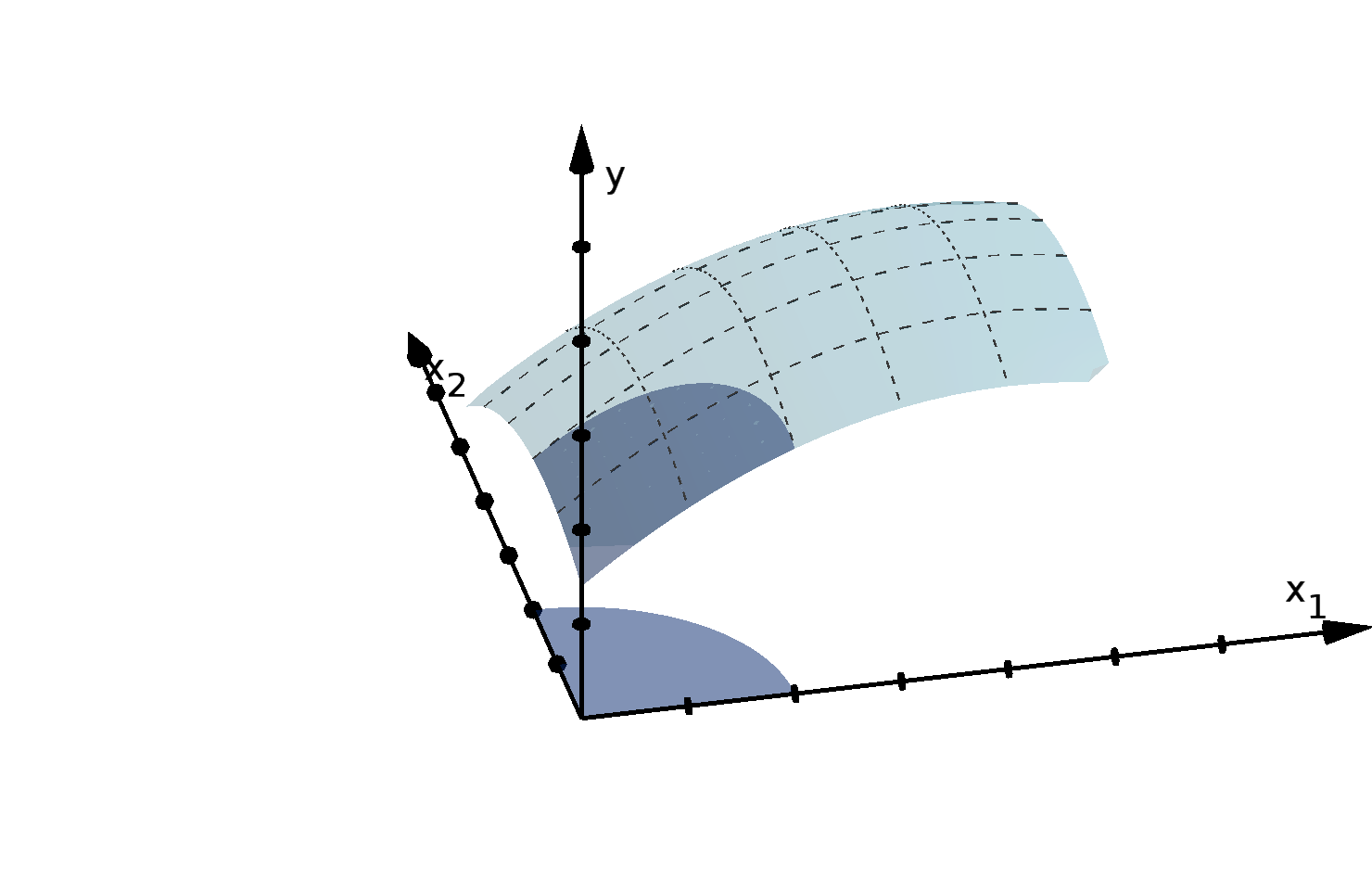

The Inequality Test for Concavity

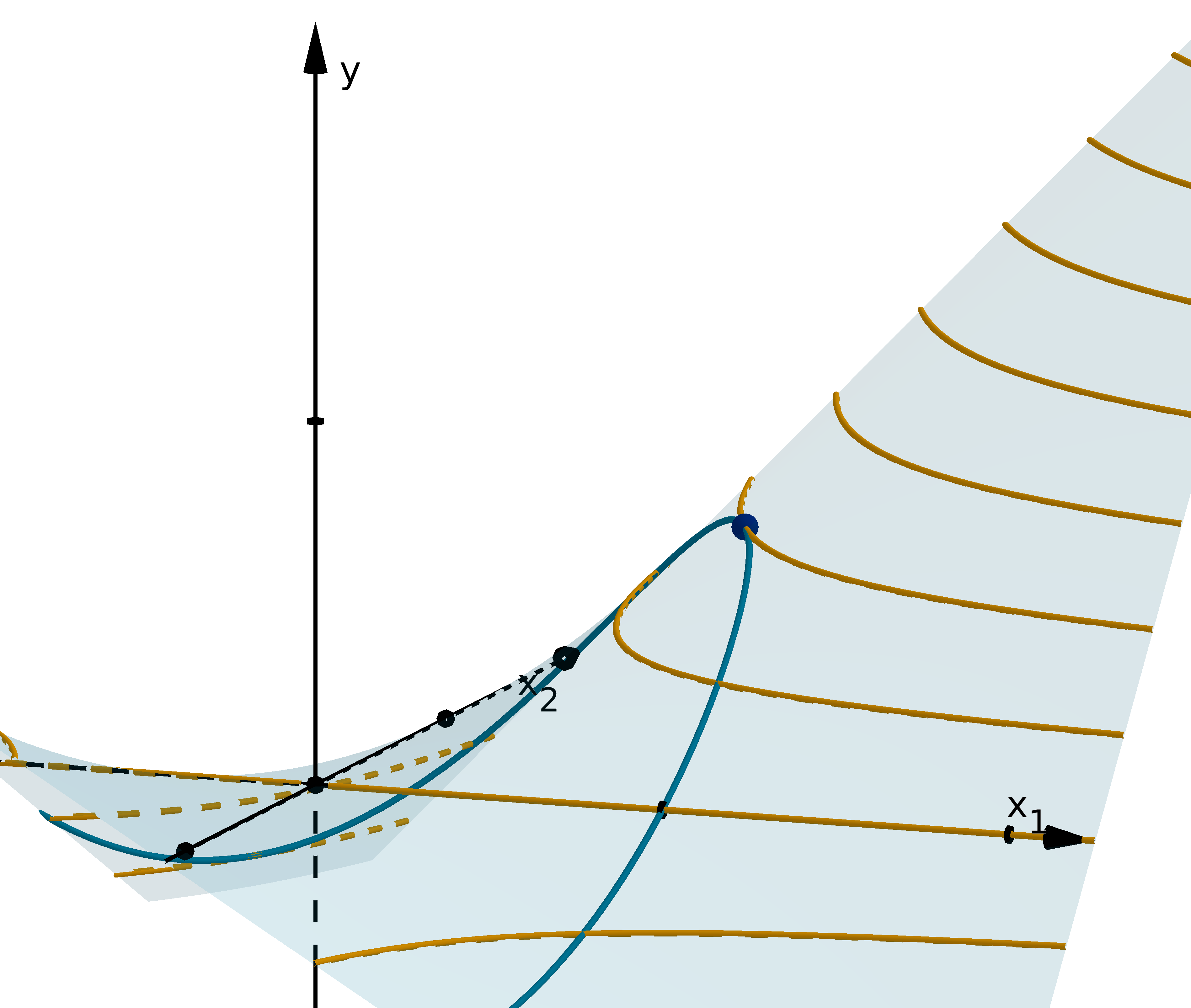

We verify the convexity of the region below y = f (x) by checking line segments between points on

the boundary. The boundary in this case is the graph itself. The line segments are called secants.

Figure 1.17: A secant below the graph of y = 5 − x

2

1

− x

2

2

We take two general points (a, f(a)) and (

b, f(

b)) on y = f(x). We parametrize the secant between

them.

x(t) =(1 − t)a + t

b

y(t) =(1 − t)f(a) + tf(

b)

0 ≤ t ≤ 1

We can write an inequality to express the condition that secant lies below the graph.

Figure 1.18: A secant below the graph of a single-variable function

54

Theorem 1.19

A function is concave, if and only if for all a and

b in its domain and any 0 ≤ t ≤ 1 we have

(1 − t)f (a) + tf(

b)

| {z }

height of secant

≤ f((1 − t)a + t

b)

| {z }

height of y=f (x(t))

0 ≤ t ≤ 1

Remarks

This is an “if and only if” condition. That means it is both necessary and sufficient for establishing

concavity.

Only a function with a convex domain can meet this condition. Otherwise we cannot always

evaluate f((1 − t)a + t

b), because (1 − t)a + t

b may lie outside the domain.

The theorem follows directly from the definitions we have developed so far. Here is a representation

of our reasoning.

(1 − t)f(a) + tf(

b) ≤ f((1 − t)a + t

b)

for all a,

b and t

The secant between any a and

b

lies under the graph y = f(x)

The region below y = f(x) is convex

f is concave

Applying the same reasoning to the definitions of convexity and strict concavity/convexity gives the

following variants. Note that we cannot demand a strict inequality at t = 0 or t = 1 because the secant

will always intersect the graph y = f(x) at a and b.

55

1.2.3 The Inequality Test for Concavity

Variants of Theorem 1.19

1 A function is convex, if and only if for all a and

b in its domain and any 0 ≤ t ≤ 1 we have

(1 − t)f (a) + tf(

b) ≥ f((1 − t)a + t

b) 0 ≤ t ≤ 1

2 A function is strictly concave, if and only if for all a and

b in its domain and any 0 < t < 1 we

have

(1 − t)f (a) + tf(

b) < f((1 − t)a + t

b) 0 < t < 1

3 A function is strictly convex, if and only if for all a and

b in its domain and any 0 < t < 1 we have

(1 − t)f (a) + tf(

b) > f((1 − t)a + t

b) 0 < t < 1

1.2.4

Verifying Concavity with an Inequality

Verify that f (x) = 5 − x

2

is concave using the inequality condition.

Solution

Let a and b be any two real numbers. We need to show that for all t in [0, 1]:

(1 − t)f (a) + tf(b) ≤ f((1 − t)a + tb)